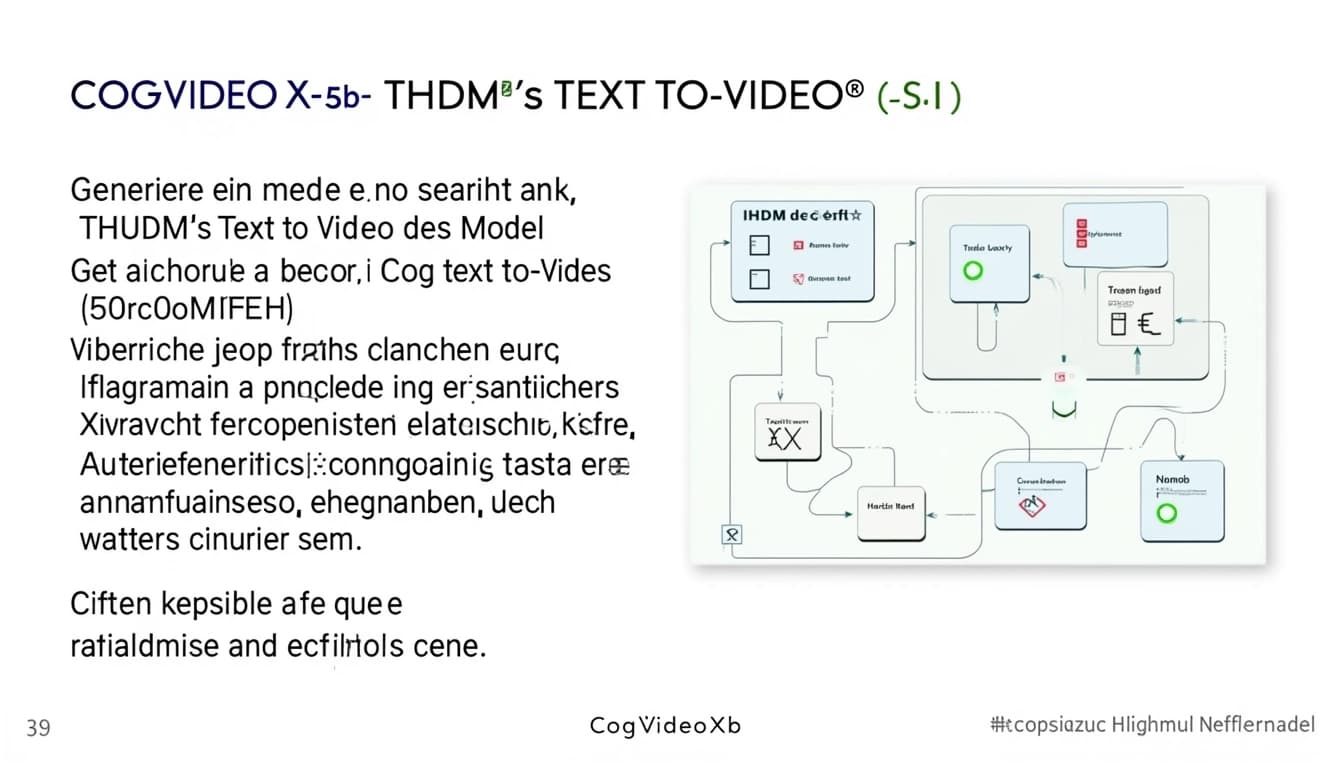

CogVideoX-5b: A Deep Dive into THUDM's Text-to-Video Model

By John Doe 5 min

CogVideoX-5b is a text-to-video generation model developed by THUDM, released in August 2024. It is designed to transform text prompts into high-quality video content, offering a creative and accessible solution for content creators. With 5 billion parameters, it builds on the success of its smaller 2B counterpart, aiming to deliver enhanced visual fidelity and coherence.

Capabilities and Features

The model generates 6-second videos at a resolution of 720x480, with 49 frames at 8 frames per second. It supports English prompts with a token limit of 226, ensuring flexibility in input. Key architectural features include:

- A 3D Variational Autoencoder (VAE) for improved video compression and fidelity.

- An expert transformer with adaptive LayerNorm for deep text-video alignment.

- Progressive training and multi-resolution frame packing, enabling coherent, motion-rich videos.

These features suggest the model can handle complex scenes with characters, objects, and backgrounds while keeping a coherent narrative, which is notable given that it reportedly runs on mid-range hardware such as the RTX 3060.

User Experience and Performance

User reviews indicate that CogVideoX-5b can run on mid-range GPUs, with generation times of around 9 minutes per video. Output quality depends heavily on how detailed the prompt is, so reviewers suggest refining prompts before generating.

CogVideoX-5b, developed by THUDM and released on August 27, 2024, represents a significant advancement in the field of text-to-video generation. This model, part of the CogVideoX series, is designed to create high-quality videos from text prompts, offering a blend of accessibility and creativity. With 5 billion parameters, it surpasses its 2B counterpart in visual quality and detail, making it a compelling choice for researchers, content creators, and AI enthusiasts.

Model Specifications and Technical Details

CogVideoX-5b is tailored for generating 6-second videos at a resolution of 720x480, with 49 frames at 8 frames per second. These fixed settings trade output length and resolution for manageable compute. The model was trained in BF16, which is also the recommended precision for inference. It additionally supports FP16, FP32, FP8, and INT8, but not INT4.

Performance and Quality

The model excels in generating high-quality visuals with significant motion and narrative coherence. It is particularly effective for creative professionals who need dynamic, motion-rich videos from text prompts. The open-source nature of the model and its efficiency on mid-range hardware further enhance its appeal.

User Experiences and Applications

Users have reported positive experiences with CogVideoX-5b, noting its ability to produce detailed and coherent videos from text prompts. The model's accessibility on mid-range hardware makes it a practical choice for those without high-end computing resources. Its creative potential is highlighted by its ability to generate dynamic visuals with a narrative flow.

Conclusion & Next Steps

CogVideoX-5b stands out as a powerful tool for text-to-video generation, combining high-quality visuals with creative potential. Its open-source nature and efficiency on mid-range hardware make it accessible to a wide range of users. Future developments could focus on expanding its capabilities and improving its performance on even more diverse hardware setups.

- High-quality video generation

- Efficient performance on mid-range hardware

- Open-source accessibility

- Creative potential with dynamic visuals

CogVideoX-5b is a state-of-the-art video generation model released on August 27, 2024. It offers high-quality video generation capabilities with a resolution of 720x480 pixels. The model is designed to generate videos with a length of 6 seconds at a frame rate of 8 frames per second.

Technical Specifications

The model supports several inference precisions: BF16, FP16, FP32, FP8, and INT8, with BF16 being the recommended option. It does not support INT4. Memory usage depends on the configuration: single-GPU BF16 inference requires about 26GB, while BF16 inference through the diffusers library starts at about 5GB. Multi-GPU inference with diffusers uses around 15GB.
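As a rough illustration of the low-memory diffusers path described above, the sketch below loads the model in BF16 and turns on two memory-saving options. It assumes the Hugging Face repository id `THUDM/CogVideoX-5b`, the `CogVideoXPipeline` class from diffusers, and a guidance scale of 6.0, none of which are stated in this article; the output path and seed are arbitrary. Treat it as a starting point rather than a reference setup.

```python
# Sketch only: settings mirror the figures quoted in this article where possible.
MODEL_ID = "THUDM/CogVideoX-5b"  # assumed Hugging Face repo id

GENERATION_KWARGS = {
    "num_frames": 49,            # default frame count quoted in the article
    "num_inference_steps": 50,   # step count used for the quoted benchmarks
    "guidance_scale": 6.0,       # assumed value, not taken from the article
}


def generate_video(prompt: str, output_path: str = "output.mp4", seed: int = 42) -> str:
    """Generate one clip from `prompt` and write it to `output_path`."""
    # Heavy imports live inside the function so this module can be imported
    # on a machine without torch or diffusers installed.
    import torch
    from diffusers import CogVideoXPipeline
    from diffusers.utils import export_to_video

    # BF16 is the precision the article recommends for inference.
    pipe = CogVideoXPipeline.from_pretrained(MODEL_ID, torch_dtype=torch.bfloat16)

    # Memory-saving options: keep idle sub-models on the CPU and decode the
    # video in tiles. Both lower peak VRAM at the cost of speed.
    pipe.enable_model_cpu_offload()
    pipe.vae.enable_tiling()

    generator = torch.Generator(device="cpu").manual_seed(seed)
    result = pipe(prompt=prompt, generator=generator, **GENERATION_KWARGS)

    # The article quotes 8 frames per second for the output video.
    export_to_video(result.frames[0], output_path, fps=8)
    return output_path
```

Calling `generate_video("A panda playing guitar in a bamboo forest")` would download the weights on first use, so expect a long initial run. Actual peak memory depends on the diffusers version and which optimizations are enabled.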

Performance Metrics

Inference speed is a critical factor for video generation models. CogVideoX-5b takes approximately 180 seconds to generate a 6-second video on a single A100 GPU and about 90 seconds on an H100 GPU. These figures assume 50 sampling steps and FP16/BF16 precision.
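The quoted benchmarks can be turned into a back-of-the-envelope estimate for other step counts. The sketch below assumes that generation time scales linearly with the number of sampling steps, which ignores fixed costs such as text encoding and VAE decoding; the function name is hypothetical.

```python
# Benchmark figures quoted in the article: seconds per video at 50 steps.
BENCHMARK_SECONDS = {"A100": 180.0, "H100": 90.0}
BENCHMARK_STEPS = 50


def estimate_seconds(gpu: str, steps: int = BENCHMARK_STEPS) -> float:
    """Estimate generation time, assuming time is proportional to step count."""
    if steps <= 0:
        raise ValueError("steps must be positive")
    if gpu not in BENCHMARK_SECONDS:
        raise KeyError(f"no benchmark for GPU {gpu!r}")
    return BENCHMARK_SECONDS[gpu] * steps / BENCHMARK_STEPS


if __name__ == "__main__":
    for gpu in BENCHMARK_SECONDS:
        print(gpu, estimate_seconds(gpu, steps=25), "seconds at 25 steps")
```

Under this assumption, halving the step count on an A100 brings the estimate down to the H100's 50-step figure, although fewer steps usually cost some output quality.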

Usage and Limitations

The model currently supports English prompts with a token limit of 226. The number of frames generated should be 8N + 1 where N is less than or equal to 6, with a default of 49 frames. The model uses 3d_rope_pos_embed for position encoding, ensuring accurate spatial-temporal relationships in the generated videos.
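The frame-count rule is easy to check in code. The sketch below enumerates the allowed values of 8N + 1 and converts a frame count to a clip length at 8 frames per second. It assumes N starts at 1, which the article does not state, and the function names are hypothetical.

```python
MAX_N = 6   # upper bound on N quoted in the article
FPS = 8     # output frame rate quoted in the article


def valid_frame_counts(max_n: int = MAX_N) -> list[int]:
    """Return every frame count of the form 8N + 1 for 1 <= N <= max_n."""
    # Assumption: N starts at 1, so a single-frame "video" is excluded.
    return [8 * n + 1 for n in range(1, max_n + 1)]


def is_valid_frame_count(num_frames: int) -> bool:
    """Check whether num_frames satisfies the 8N + 1 rule."""
    return num_frames in valid_frame_counts()


def clip_seconds(num_frames: int, fps: int = FPS) -> float:
    """Length of the clip in seconds at the given frame rate."""
    return num_frames / fps


if __name__ == "__main__":
    print(valid_frame_counts())      # [9, 17, 25, 33, 41, 49]
    print(is_valid_frame_count(48))  # False
    print(clip_seconds(49))          # 6.125
```

The default of 49 frames corresponds to N = 6 and plays for 6.125 seconds at 8 frames per second, which is the roughly 6-second clip length quoted throughout this article.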

Availability and Resources

CogVideoX-5b is available for download via HuggingFace, ModelScope, and WiseModel. Users can also experience the model online through HuggingFace Space and ModelScope Space, which provide interactive demos to test the model's capabilities without requiring local installation.

Conclusion and Future Directions

CogVideoX-5b represents a significant advancement in video generation technology, offering high-quality outputs with efficient resource usage. Future updates may include support for additional languages and improved inference speeds, further enhancing its usability for a wider range of applications.

- Support for additional languages

- Improved inference speeds

- Enhanced memory efficiency

- Extended video length capabilities

CogVideoX-5B is a cutting-edge text-to-video generation model developed by Zhipu AI, designed to create high-quality, dynamic videos from textual descriptions. This model represents a significant advancement in the field of AI-driven video generation, offering users the ability to produce visually compelling content with ease. The model's architecture and training methodologies are tailored to ensure high fidelity and coherence in the generated videos, making it a powerful tool for creative professionals and enthusiasts alike.

Key Features and Capabilities

CogVideoX-5B stands out for its ability to generate videos with significant motion at a resolution of 720x480. It produces clips of up to 49 frames, which suits short-form applications. Its efficiency is notable, with single-GPU VRAM consumption starting at just 5GB for BF16 using diffusers, and an inference time of approximately 90 seconds on an H100 GPU for 50 steps. These features make it accessible and practical for a wide range of users.

Architectural Innovations

The model employs a 3D Variational Autoencoder (VAE) to compress videos along both spatial and temporal dimensions, enhancing compression rates and video fidelity. This innovation is crucial for maintaining quality in motion-rich videos. Additionally, an expert transformer with expert adaptive LayerNorm facilitates deep fusion between text and video modalities, improving text-video alignment. The progressive training and multi-resolution frame pack technique enable the generation of coherent, long-duration videos with complex scenes.
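To make the idea of compressing along both spatial and temporal dimensions concrete, the sketch below computes the latent grid size for a default clip. The compression factors (4x in time, 8x in each spatial dimension) and the convention that the first frame is encoded on its own are assumptions commonly cited for CogVideoX, not figures from this article.

```python
def latent_shape(
    num_frames: int = 49,
    height: int = 480,
    width: int = 720,
    temporal_factor: int = 4,  # assumed temporal compression factor
    spatial_factor: int = 8,   # assumed spatial compression factor
) -> tuple[int, int, int]:
    """Return (latent_frames, latent_height, latent_width) for a clip."""
    # Assumption: the first frame is encoded separately, and the remaining
    # frames are grouped in blocks of `temporal_factor`.
    if (num_frames - 1) % temporal_factor != 0:
        raise ValueError("num_frames - 1 must be divisible by temporal_factor")
    if height % spatial_factor != 0 or width % spatial_factor != 0:
        raise ValueError("height and width must be divisible by spatial_factor")

    latent_frames = (num_frames - 1) // temporal_factor + 1
    return latent_frames, height // spatial_factor, width // spatial_factor


if __name__ == "__main__":
    print(latent_shape())  # (13, 60, 90)
```

Under these assumptions, a 49-frame 720x480 clip is represented by a 13 x 60 x 90 latent grid, so the transformer attends over far fewer positions than there are pixels.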

Creative Applications

CogVideoX-5B excels in generating diverse and dynamic videos, from serene landscapes to action-packed sequences. Examples include detailed scenes like a street racer, a 1950s airport, a LAN party, and a horse dunking a basketball. This versatility makes it an excellent tool for creative expression, allowing users to explore a wide range of styles and themes. The model's ability to handle complex prompts and produce high-quality outputs sets it apart from other text-to-video models.

User Experience and Performance

The model is designed with user experience in mind, offering straightforward integration and usage. It can be accessed via the ModelScope demo or through the Hugging Face Space. The technical documentation and user guide provide detailed instructions for setup and operation, ensuring a smooth experience for both beginners and advanced users. Performance-wise, the model delivers consistent results, with high-resolution outputs and efficient processing times, making it a reliable choice for various applications.

Conclusion & Next Steps

CogVideoX-5B represents a significant leap forward in text-to-video generation, combining advanced architectural innovations with practical usability. Its ability to produce high-quality, dynamic videos from textual descriptions opens up new possibilities for creative professionals and AI enthusiasts. Future developments may focus on further enhancing video quality, expanding the range of supported styles, and improving inference speeds to make the model even more accessible and versatile.

- High-resolution video generation at 720x480

- Clips of up to 49 frames at 8 frames per second

- Efficient VRAM usage starting at 5GB for BF16

- Advanced 3D VAE and expert transformer architecture

CogVideoX-2B and CogVideoX-5B are advanced AI models designed for video generation, offering significant improvements over previous versions. These models leverage deep learning to create high-quality videos from textual prompts, making them accessible to a wide range of users, from hobbyists to professionals. The 2B model is optimized for older hardware like the GTX 1080 Ti, while the 5B model requires a more capable GPU such as the RTX 3060.

Performance and Hardware Requirements

The CogVideoX-2B model is known for its quick generation times, typically taking 2-3 minutes per video. This makes it ideal for users who need faster results without compromising too much on quality. On the other hand, the CogVideoX-5B model, while more resource-intensive, produces higher-quality videos with greater detail and better adherence to prompts, though it takes around 9 minutes to generate a video.

Comparison of 2B and 5B Models

The 5B model excels in scenarios where detail and accuracy are paramount, such as professional video production or detailed animations. However, the 2B model remains a viable option for those with limited hardware or those who prioritize speed over ultra-high fidelity. Both models have been praised for their ability to interpret complex prompts, especially when refined using tools like GPT for better clarity.

User Feedback and Practical Applications

Reviews from platforms like Ominous Industries highlight the practical usability of these models. Users have reported successful generations on mid-range hardware, though some encountered minor issues like terminal closures post-generation, which were easily resolved. The quality of the output is heavily dependent on the prompt's specificity, with detailed prompts yielding significantly better results.

Conclusion & Next Steps

CogVideoX-2B and 5B represent a leap forward in AI-driven video generation, balancing performance and accessibility. For those looking to explore AI video creation, starting with the 2B model on older hardware is a practical approach, while the 5B model offers enhanced capabilities for more demanding projects. Future updates may further optimize these models for even broader hardware compatibility and faster generation times.

- Consider starting with the 2B model if you have older hardware

- Use GPT to refine prompts for better results

- Expect longer generation times with the 5B model but higher quality outputs

CogVideoX-5b is an advanced text-to-video generation model developed by Tsinghua University's Knowledge Engineering Group (KEG). It builds upon the success of its predecessor, CogVideo, by incorporating an expert transformer architecture to enhance video quality and coherence. The model is designed to generate high-resolution videos (720x480) from textual prompts, making it a powerful tool for creative applications.

Model Architecture and Features

CogVideoX-5b utilizes a diffusion-based framework combined with an expert transformer to improve text-video alignment and motion dynamics. The model supports multi-frame generation, allowing for smooth transitions and coherent scene progression. It is trained on a diverse dataset, enabling it to handle a wide range of prompts, from realistic scenes to abstract concepts. The architecture also includes adaptive computation time (ACT) to optimize resource usage during inference.

Performance and Hardware Requirements

The model requires significant GPU VRAM, with the 5B variant needing around 24GB for optimal performance. Despite its size, CogVideoX-5b is optimized for efficiency, and users can run it on mid-range hardware, though higher-end GPUs are recommended for best results. Because a single clip takes from about 90 seconds on an H100 to several minutes on consumer GPUs, the model suits offline generation rather than real-time interactive use.

Creative Applications

CogVideoX-5b excels in generating visually appealing and dynamic videos from text prompts. It is particularly effective for storytelling, marketing, and educational content, where motion and detail are crucial. The model's open-source nature allows developers to fine-tune it for specific use cases, further expanding its versatility. Examples include animated explainer videos, fantasy scenes, and even short film concepts.

User Feedback and Community Reception

The model has garnered positive feedback for its ability to produce high-quality videos with minimal artifacts. Users appreciate its balance between creativity and coherence, though some note the occasional need for prompt tuning to achieve desired results. Community contributions, such as custom scripts and tutorials, have further enhanced its accessibility. The model's integration with platforms like Hugging Face and GitHub has facilitated widespread adoption.

Conclusion & Next Steps

CogVideoX-5b represents a significant leap in text-to-video generation, offering both quality and flexibility. Its open-source availability and active community support make it a valuable resource for developers and creators alike. Future updates may focus on reducing hardware requirements and improving prompt adherence, ensuring even broader usability. For now, it stands as a top choice for AI-driven video production.

- High-resolution video generation (720x480)

- Expert transformer architecture for improved coherence

- Optimized for mid-range to high-end GPUs

- Open-source and community-supported

- Versatile applications in storytelling and marketing

CogVideoX-5B is a cutting-edge model for text-to-video generation, developed by THUDM and Zhipu AI. This advanced model builds upon previous work in AI-driven video synthesis, offering enhanced capabilities for creating realistic and coherent videos from textual descriptions.

Key Features of CogVideoX-5B

The CogVideoX-5B model stands out due to its impressive scale and performance. With 5 billion parameters, it can generate high-quality videos that align closely with the input text prompts. The model has been made available through multiple platforms, including HuggingFace and ModelScope, making it accessible to a wide range of users.

Accessibility and Deployment

One of the notable aspects of CogVideoX-5B is its availability across different platforms. Users can access the model via HuggingFace Spaces, ModelScope Spaces, or directly download it for local use. This flexibility ensures that researchers and developers can integrate the model into their workflows with ease.

Demonstrations and Community Engagement

The model has garnered attention in the AI community, with demonstrations shared on platforms like X (formerly Twitter). These showcases highlight the model's ability to generate videos from diverse prompts, sparking interest and discussion among AI enthusiasts and professionals alike.

Future Directions and Applications

As text-to-video generation technology continues to evolve, models like CogVideoX-5B pave the way for innovative applications in content creation, education, and entertainment. The open availability of such models encourages further research and development in this exciting field of AI.

- Text-to-video generation for creative content

- Educational video production

- Prototyping and visualization tools