ControlNet-Scribble: Transforming Sketches into Detailed Art with AI

By John Doe, 5 min read

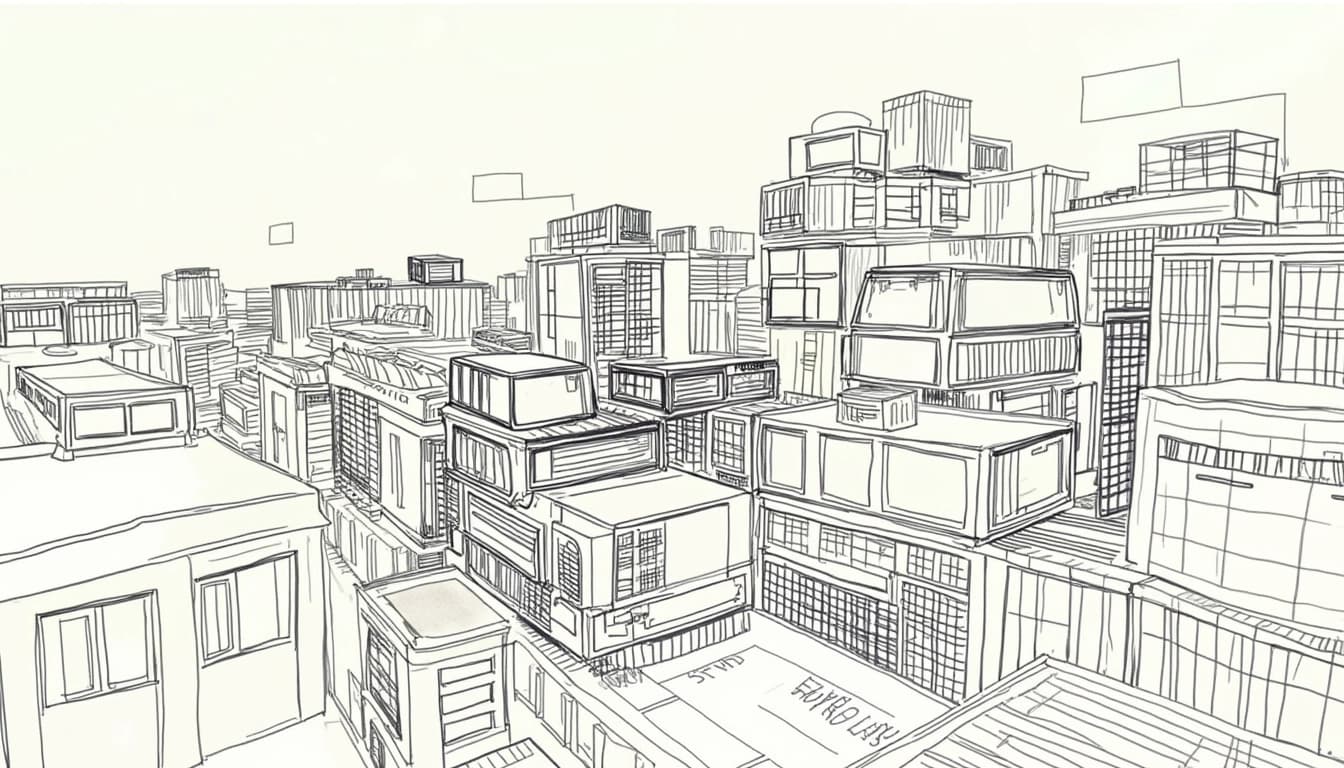

controlnet-scribble turns simple sketches into detailed art by combining a user's drawing with a text prompt and letting AI fill in the details.

Key Points

Built on Stable Diffusion and ControlNet, the tool preserves the structure of a scribble while adding detail, which makes it a practical aid for creative work.

Its main uses are in art, design, and education. Its main limitation is that output quality depends heavily on the quality of the input sketch and prompt.

Background and Technology

controlnet-scribble is an AI tool that turns rough sketches ('doodles') into refined artwork by combining Stable Diffusion with ControlNet. Stable Diffusion is a text-to-image model that generates images from text prompts. ControlNet adds an extra conditioning input, such as a sketch, that constrains the structure of the generated image. Together they let controlnet-scribble take a user's scribble and a text description and produce a detailed image that follows both.

How It Works

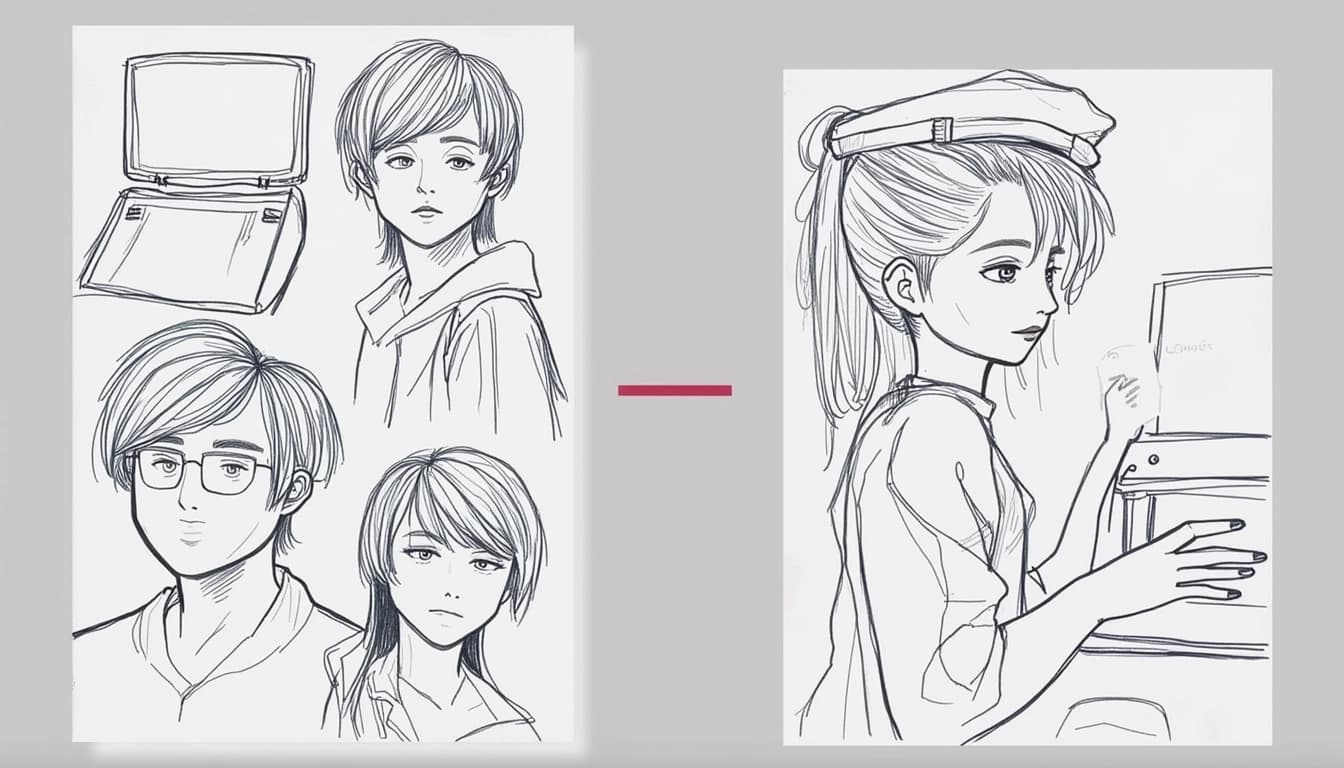

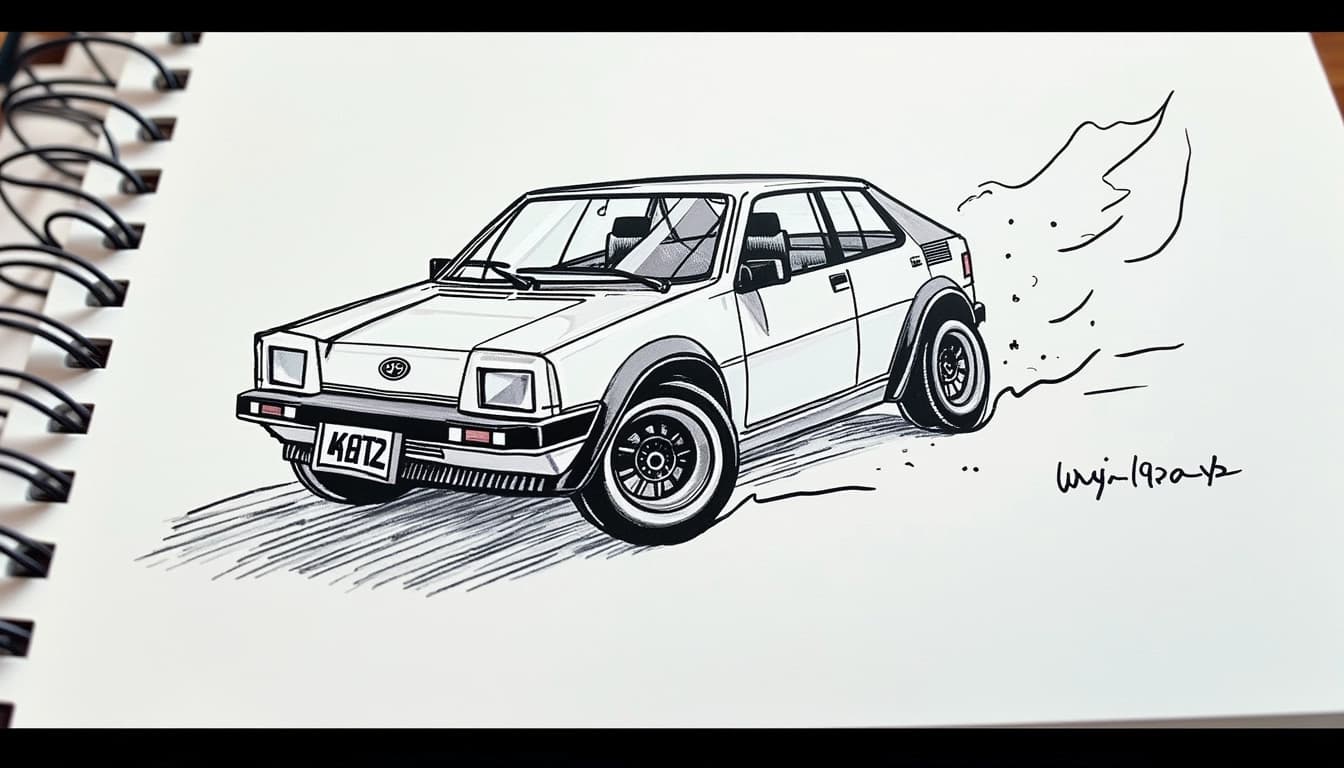

The process begins with the user drawing a simple sketch, which serves as a structural guide. The user then provides a text prompt describing the desired subject, style, and details, such as 'a majestic oak tree in a forest.' The model passes the sketch through its ControlNet component and combines it with the text prompt. The result is an image that follows the sketch's layout while adding the described elements. The model was trained on images paired with scribble-like maps synthesized from their detected edges, which teaches it to map rough sketches to detailed visuals.
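The sketch must be converted into a conditioning image before the model sees it. The original scribble checkpoint expects white strokes on a black background, but people usually draw dark lines on white paper. As we recall it, the ControlNet authors' Gradio scribble demo handles this mismatch by marking every pixel darker than 127 as a stroke. The sketch below reproduces that conversion in plain Python. Two simplifications are assumptions made to keep it dependency-free: the image is stored as a list of rows, and the function names are hypothetical. Real code would use NumPy or PIL.

```python
from typing import List, Tuple

Gray = List[List[int]]  # rows of 0-255 grayscale values


def sketch_to_scribble_map(sketch: Gray, threshold: int = 127) -> Gray:
    """Convert a dark-on-light drawing into a white-on-black scribble map.

    Pixels darker than `threshold` are treated as pen strokes (255);
    everything else becomes background (0).
    """
    return [[255 if px < threshold else 0 for px in row] for row in sketch]


def from_rgb(rgb_rows: List[List[Tuple[int, int, int]]]) -> Gray:
    """Collapse RGB pixels to one channel by taking the darkest channel,
    so a stroke drawn in any dark colour still counts as a stroke."""
    return [[min(px) for px in row] for row in rgb_rows]


# A 3x4 "drawing": one dark horizontal stroke on white paper.
drawing = [
    [250, 250, 250, 250],
    [20, 30, 25, 40],
    [250, 250, 250, 250],
]
scribble = sketch_to_scribble_map(drawing)
for row in scribble:
    print(row)
```

The fixed threshold keeps the conversion predictable. A very faint pencil line lighter than 127 disappears, which is one concrete way in which input quality affects the result.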

Applications and Examples

controlnet-scribble is versatile. Artists and designers use it to turn concepts into finished images, teams use it for rapid prototyping, educators use it to visualize ideas, and storytellers use it to create illustrations. For instance, a sketch of a tree paired with the prompt 'a majestic oak tree in a forest' can yield a detailed image of an oak tree with sunlight filtering through its leaves.

At the intersection of creativity and artificial intelligence (AI), controlnet-scribble stands out as a tool that turns simple doodles into finished artwork. The sections below examine its technology, functionality, and implications in more detail, expanding on the key points above.

Introduction to controlnet-scribble

controlnet-scribble is an AI-driven tool designed to enhance digital art creation by allowing users to input rough sketches, or scribbles, and combine them with text prompts to generate detailed images. This technology, rooted in the advancements of Stable Diffusion and ControlNet, democratizes art by bridging traditional sketching with AI-generated imagery, making it accessible to artists, designers, and hobbyists alike.

Technological Foundations

Stable Diffusion: The Base Model

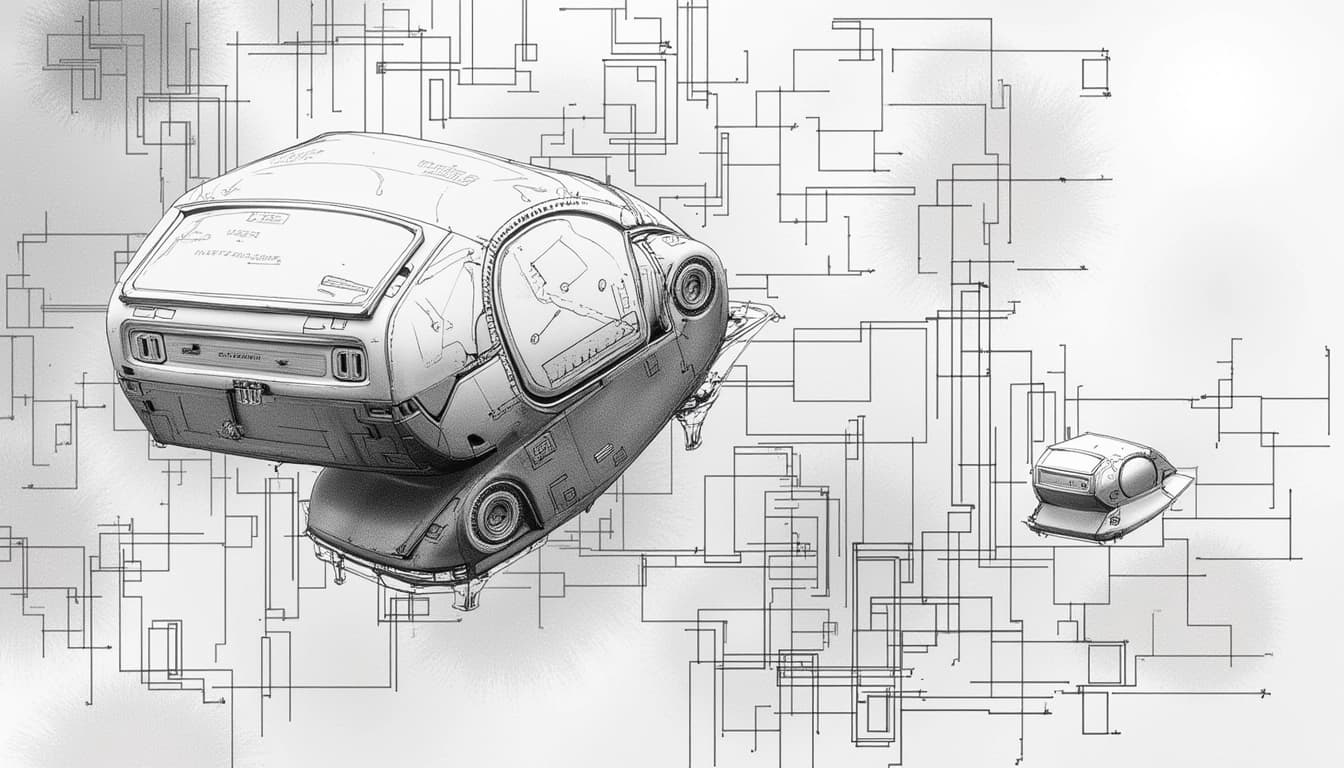

Stable Diffusion was released by Stability AI in collaboration with the CompVis group and Runway. It is a text-to-image model that generates images by iterative denoising. It starts from random noise in a compressed latent space, removes noise step by step under the guidance of a text description, and then decodes the result into an image. Its ability to produce high-quality visuals from prompts has made it widely adopted for digital art. For instance, a prompt like 'a serene lake at sunset' can generate a detailed landscape image.
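To make 'transforming random noise into images' more concrete: a diffusion model learns to undo a fixed forward process that blends an image with Gaussian noise, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The sketch below computes ᾱ_t for the 'scaled linear' schedule used in Stable Diffusion 1.x scheduler configs (β from 0.00085 to 0.012 over 1,000 steps). It then noises a single scalar 'pixel'. In Stable Diffusion this mixing happens on latent tensors, not on individual pixel values; the scalar and the function names are simplifications for illustration.

```python
import math
import random


def scaled_linear_betas(n_steps=1000, beta_start=0.00085, beta_end=0.012):
    """Betas spaced linearly in sqrt-space, then squared ('scaled_linear')."""
    s0, s1 = math.sqrt(beta_start), math.sqrt(beta_end)
    return [(s0 + (s1 - s0) * i / (n_steps - 1)) ** 2 for i in range(n_steps)]


def alphas_cumprod(betas):
    """alpha_bar_t = prod over s <= t of (1 - beta_s): the signal left at step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def add_noise(x0, t, alpha_bar, rng):
    """Forward process: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    eps = rng.gauss(0.0, 1.0)
    a = alpha_bar[t]
    return math.sqrt(a) * x0 + math.sqrt(1.0 - a) * eps


betas = scaled_linear_betas()
alpha_bar = alphas_cumprod(betas)
rng = random.Random(0)
print(f"signal kept at t=0:    {alpha_bar[0]:.5f}")
print(f"signal kept at t=999:  {alpha_bar[-1]:.5f}")
print(f"noisy sample at t=500: {add_noise(1.0, 500, alpha_bar, rng):.3f}")
```

Generation runs this process in reverse. A trained network predicts ε at each step so that pure noise can be walked back toward an image that matches the prompt.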

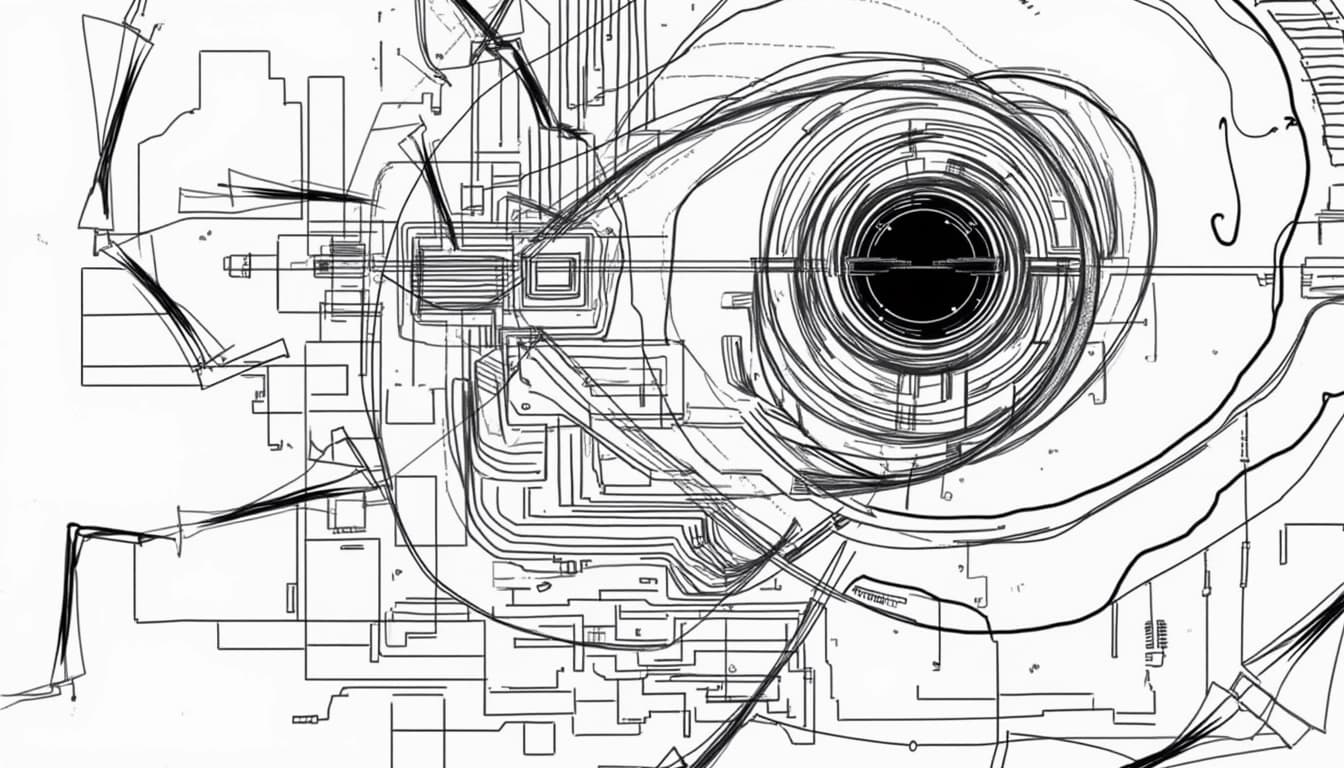

ControlNet: Adding Conditional Control

ControlNet extends the capabilities of Stable Diffusion by introducing conditional control mechanisms. This allows users to guide the image generation process more precisely, ensuring that the output aligns with specific artistic visions or requirements. By integrating scribbles as additional inputs, ControlNet enables a more interactive and controlled approach to AI-generated art.
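The ControlNet paper (Zhang and Agrawala, 2023) describes how this control is attached. A trainable copy of Stable Diffusion's encoder blocks runs alongside the frozen original. The copy reads the conditioning input, here the scribble, and its output is added back into the frozen network through 'zero convolutions': 1×1 convolutions whose weights and biases start at zero. The paper writes one controlled block as y = F(x) + Z(F_copy(x + Z(c))). The toy below implements that formula on plain lists and shows why the zero initialization matters: before training, the control branch contributes nothing, so the model starts out identical to the base model. The affine 'blocks' and the per-channel zero convolution are deliberate simplifications, not the real layers.

```python
from dataclasses import dataclass, field
from typing import List

Vec = List[float]


@dataclass
class AffineBlock:
    """Stand-in for a network block: y = scale * x + shift, per channel."""
    scale: float
    shift: float

    def __call__(self, x: Vec) -> Vec:
        return [self.scale * v + self.shift for v in x]


@dataclass
class ZeroConv:
    """Simplified 1x1 'zero convolution': per-channel weight and bias, both starting at 0."""
    channels: int
    weight: Vec = field(init=False)
    bias: Vec = field(init=False)

    def __post_init__(self) -> None:
        self.weight = [0.0] * self.channels
        self.bias = [0.0] * self.channels

    def __call__(self, x: Vec) -> Vec:
        return [w * v + b for w, v, b in zip(self.weight, x, self.bias)]


def controlled_block(frozen, copy, z_in, z_out, x, c):
    """y = F(x) + Z_out(F_copy(x + Z_in(c))): frozen output plus a control residual."""
    copy_input = [xi + zi for xi, zi in zip(x, z_in(c))]
    residual = z_out(copy(copy_input))
    return [f + r for f, r in zip(frozen(x), residual)]


frozen = AffineBlock(scale=2.0, shift=1.0)       # locked base-model block
copy = AffineBlock(frozen.scale, frozen.shift)   # trainable copy, initialized from it
z_in, z_out = ZeroConv(3), ZeroConv(3)

x = [0.5, -1.0, 3.0]   # features from the previous layer
c = [1.0, 0.0, 1.0]    # encoded scribble features
print(controlled_block(frozen, copy, z_in, z_out, x, c))  # equals frozen(x) at init

z_in.weight = [0.5, 0.5, 0.5]    # pretend training has moved the zero convs
z_out.weight = [0.1, 0.1, 0.1]
print(controlled_block(frozen, copy, z_in, z_out, x, c))  # now the scribble shifts the output
```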

Functionality and Use Cases

controlnet-scribble excels in transforming rough sketches into polished artworks. Users can draw basic outlines or shapes, and the AI fills in the details based on the provided text prompts. This functionality is particularly useful for concept artists, illustrators, and designers who want to quickly visualize ideas without spending hours on detailed drawings.

Limitations and Challenges

While controlnet-scribble offers impressive capabilities, it is not without limitations. The quality of the output heavily depends on the clarity of the input scribble and the specificity of the text prompt. Additionally, the tool may struggle with highly complex or abstract concepts that are not well-represented in its training data.

Conclusion & Next Steps

controlnet-scribble represents a significant leap forward in the fusion of AI and digital art. By enabling users to transform simple scribbles into detailed images, it opens up new possibilities for creative expression. Future developments could focus on improving the tool's ability to handle more complex inputs and expanding its versatility across different artistic styles and genres.

- Enhances digital art creation with AI

- Bridges traditional sketching and AI-generated imagery

- Accessible to artists, designers, and hobbyists

- Future improvements could focus on handling complex inputs

ControlNet-Scribble is a specialized version of the ControlNet model designed to transform rough sketches into detailed images using text prompts. This model is particularly useful for artists and designers who want to quickly visualize their ideas without needing advanced drawing skills. By leveraging the power of Stable Diffusion, ControlNet-Scribble can interpret simple scribbles and generate high-quality images based on the provided text descriptions.

How ControlNet-Scribble Works

ControlNet-Scribble operates by combining a user-drawn scribble with a text prompt to produce a detailed image. According to its model card, it was trained on 500k scribble-image-caption pairs, which lets it interpret rough sketches effectively. Training took 150 GPU-hours on NVIDIA A100 80GB GPUs and used the Canny ControlNet model as its starting point. The result is a model that maps basic outlines to richly detailed images, guided by textual descriptions.

Training and Development

The training of ControlNet-Scribble focused on teaching the model to recognize and enhance rough sketches. Training on a large dataset of scribble-image pairs taught the model to infer detailed structure from minimal input. This is why even very basic sketches can be turned into visually appealing images, and it makes the model a valuable tool for creative professionals and hobbyists alike.
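The sd-controlnet-scribble model card states that the scribble training data was synthesized from real images. Edges were first detected with HED boundary detection and then put through augmentations such as random thresholds, masking, morphological transformations, and non-maximum suppression. The sketch below is a simplified, hypothetical version of part of that augmentation step; it skips non-maximum suppression. It assumes an edge-probability map is already available (HED itself is not implemented), binarizes it with a random threshold, randomly drops some stroke pixels, and thickens the rest with a 3×3 dilation. The function names and parameter ranges are illustrative, not the values used in training.

```python
import random
from typing import List

Grid = List[List[float]]
Mask = List[List[int]]


def dilate(mask: Mask) -> Mask:
    """3x3 binary dilation: a pixel turns on if any neighbour is on (thickens strokes)."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if any(
                mask[y][x]
                for y in range(max(0, i - 1), min(h, i + 2))
                for x in range(max(0, j - 1), min(w, j + 2))
            ):
                out[i][j] = 1
    return out


def synthesize_scribble(edge_prob: Grid, rng: random.Random,
                        drop_rate: float = 0.2) -> Mask:
    """Hypothetical scribble augmentation: random threshold, random masking, dilation."""
    threshold = rng.uniform(0.3, 0.7)  # a different binarization threshold per sample
    strokes = [[1 if p > threshold else 0 for p in row] for row in edge_prob]
    # Randomly erase some stroke pixels so lines look broken, like quick hand drawing.
    masked = [[s if rng.random() >= drop_rate else 0 for s in row] for row in strokes]
    return dilate(masked)


# A fake 5x5 edge map with a strong vertical edge in the middle column.
edges = [[0.9 if j == 2 else 0.05 for j in range(5)] for _ in range(5)]
scribble = synthesize_scribble(edges, random.Random(42))
for row in scribble:
    print("".join("#" if v else "." for v in row))
```

Randomizing the threshold and masking means the model never sees perfectly clean edges during training. That is what lets it tolerate the wobbly, incomplete lines people actually draw.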

Practical Usage and Examples

Using ControlNet-Scribble is straightforward and accessible to users with varying levels of technical expertise. The process typically involves three steps: drawing a scribble, providing a text prompt, and generating the image. For instance, a simple outline of a tree combined with the prompt 'a majestic oak tree in a forest' can result in a detailed and realistic image of an oak tree with sunlight filtering through its leaves.
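In code, the three steps map onto the Hugging Face diffusers library roughly as shown below. This is a sketch under several assumptions:

- It uses the public `lllyasviel/sd-controlnet-scribble` checkpoint.
- The Stable Diffusion 1.5 base model is loaded from `stable-diffusion-v1-5/stable-diffusion-v1-5`. This repository id has moved over time, so check the Hub before relying on it.
- A CUDA GPU is available.
- The scribble image is already white strokes on black.

`generate_from_scribble` and `snap_to_multiple_of_8` are hypothetical helper names. Stable Diffusion works on latents that are 1/8 of the image size, so the pipeline needs image sides divisible by 8; the helper rounds them down.

```python
from typing import Tuple


def snap_to_multiple_of_8(width: int, height: int) -> Tuple[int, int]:
    """Round each side down to a multiple of 8 (SD latents are 1/8 of the image size)."""
    return max(8, width - width % 8), max(8, height - height % 8)


def generate_from_scribble(scribble_path: str, prompt: str, seed: int = 0):
    """Scribble + prompt -> image. Requires diffusers, torch, and a CUDA GPU."""
    # Imported lazily so the helper above works without these heavy dependencies.
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline
    from diffusers.utils import load_image

    # Step 1: the drawing, expected as white lines on a black background.
    scribble = load_image(scribble_path)
    width, height = snap_to_multiple_of_8(*scribble.size)
    scribble = scribble.resize((width, height))

    controlnet = ControlNetModel.from_pretrained(
        "lllyasviel/sd-controlnet-scribble", torch_dtype=torch.float16
    )
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        "stable-diffusion-v1-5/stable-diffusion-v1-5",  # assumed base-model repo id
        controlnet=controlnet,
        torch_dtype=torch.float16,
    ).to("cuda")

    # Steps 2 and 3: the text prompt, then generation with a fixed seed.
    result = pipe(
        prompt,
        image=scribble,
        height=height,
        width=width,
        num_inference_steps=20,
        generator=torch.Generator("cuda").manual_seed(seed),
    )
    return result.images[0]


if __name__ == "__main__":
    print(snap_to_multiple_of_8(517, 390))
    # image = generate_from_scribble("tree_scribble.png", "a majestic oak tree in a forest")
    # image.save("oak.png")
```

Fixing the seed makes runs reproducible, so changes in the output can be attributed to edits in the sketch or prompt rather than to random noise.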

Applications and Use Cases

ControlNet-Scribble has a wide range of applications, from concept art and storyboarding to educational tools and creative exploration. Artists can use it to quickly prototype ideas, while educators might employ it to visualize complex concepts for students. The model's ability to interpret rough sketches makes it a versatile tool for anyone looking to bring their ideas to life with minimal effort.

Conclusion & Next Steps

ControlNet-Scribble represents a significant advancement in the field of AI-assisted creativity. By bridging the gap between rough sketches and detailed images, it opens up new possibilities for artists, designers, and educators. Future developments could include enhanced customization options and integration with other creative tools, further expanding its utility and accessibility.

- ControlNet-Scribble transforms rough sketches into detailed images.

- The model was trained on 500k scribble-image-caption pairs.

- It is accessible to users with varying levels of technical expertise.

- Applications include concept art, education, and creative exploration.

ControlNet-Scribble is a specialized model within the Stable Diffusion ecosystem that transforms hand-drawn sketches into photorealistic images. This model leverages the power of ControlNet to interpret and enhance user-drawn scribbles, making it a valuable tool for artists and designers. By converting rough sketches into detailed images, it bridges the gap between initial concepts and final artwork.

Key Features of ControlNet-Scribble

ControlNet-Scribble stands out for handling a wide range of scribble inputs, from rough doodles to more structured sketches. Users report output quality they find comparable to professional tools like Midjourney. They also report that the model helps with anatomical details such as hands, which are often challenging for other AI models. It also offers flexibility in artistic style, allowing users to achieve various aesthetics.

High-Resolution Outputs

One of the most-cited strengths of ControlNet-Scribble is its image quality. The underlying Stable Diffusion 1.5 model is trained at 512×512 pixels, so high-resolution results are usually obtained by upscaling or refining its output. This workflow suits professional applications where detail and clarity matter. Users have reported results that rival those of premium tools, making it a cost-effective alternative for high-quality image generation.

Limitations and Challenges

Despite its strengths, ControlNet-Scribble has some limitations. The model may struggle with highly abstract or niche subjects, requiring more precise inputs to generate accurate results. Complex details, such as fine textures or overlapping elements, can also pose challenges. These limitations suggest areas for future improvement, such as expanding the training dataset or enhancing the model's ability to handle intricate inputs.

Handling Complex Structures

The model's performance with complex structures, like intricate textures or overlapping objects, can be inconsistent. Users may need to refine their sketches or provide additional guidance to achieve the desired outcome. This highlights the importance of understanding the model's capabilities and limitations when working on detailed projects.
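In diffusers, one form of 'additional guidance' is the `controlnet_conditioning_scale` argument of the ControlNet pipeline. The ControlNet outputs are multiplied by this value (1.0 by default) before they are added to the base model. Lowering it lets the prompt override a sketch that is too rough; raising it enforces the outline more strictly. The sketch below builds a small sweep of call arguments with a fixed seed so that only the scale changes between runs. `build_sweep` and `run_sweep` are hypothetical helpers, and the scale values are arbitrary starting points, not recommendations from the model authors.

```python
from typing import Dict, List, Sequence


def build_sweep(prompt: str, scales: Sequence[float] = (0.5, 0.8, 1.0, 1.2),
                seed: int = 1234, steps: int = 20) -> List[Dict]:
    """One set of pipeline kwargs per conditioning scale; everything else held fixed."""
    return [
        {
            "prompt": prompt,
            "num_inference_steps": steps,
            "controlnet_conditioning_scale": float(s),
            "seed": seed,  # turned into a torch.Generator at call time
        }
        for s in scales
    ]


def run_sweep(pipe, scribble, runs: List[Dict]):
    """Run each configuration on an already-loaded StableDiffusionControlNetPipeline."""
    import torch  # lazy import: only needed when actually generating

    images = []
    for kwargs in runs:
        kwargs = dict(kwargs)          # copy so the caller's dicts are untouched
        seed = kwargs.pop("seed")
        generator = torch.Generator(device=pipe.device).manual_seed(seed)
        images.append(pipe(image=scribble, generator=generator, **kwargs).images[0])
    return images


runs = build_sweep("a majestic oak tree in a forest")
for r in runs:
    print(r["controlnet_conditioning_scale"])
```

Comparing the resulting images side by side is a quick way to tell whether a poor result comes from the sketch being followed too literally or too loosely.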

Comparative Analysis with Other Models

ControlNet-Scribble is part of a broader ecosystem of ControlNet models, each designed for a different kind of conditioning input. For example, the ControlNet-Canny-Sdxl-1.0 model uses Canny edge detection and is noted for its aesthetic performance. ControlNet-Scribble, by contrast, accepts freehand lines of any kind rather than edges extracted by a specific detector, which makes it a more general and interactive option. This comparison underscores the unique value of ControlNet-Scribble for users who prefer hand-drawn inputs.
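In practice, switching condition type mostly means switching checkpoints, and the preprocessing has to match. A Canny model expects detector-generated edge maps; a scribble model expects freehand strokes. The lookup table below lists a few public Hugging Face repositories that we believe correspond to the models discussed here; verify the ids on the Hub before use. The `ControlNetSpec` class and `pick_controlnet` function are hypothetical. Note that the SDXL model must be paired with an SDXL base model and `StableDiffusionXLControlNetPipeline`, not the SD 1.5 pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlNetSpec:
    repo_id: str       # ControlNet weights on the Hugging Face Hub
    base_family: str   # Stable Diffusion family it plugs into
    pipeline: str      # diffusers pipeline class to pair it with
    expects: str       # what the conditioning image should look like


CONTROLNETS = {
    "scribble": ControlNetSpec(
        "lllyasviel/sd-controlnet-scribble", "SD 1.5",
        "StableDiffusionControlNetPipeline", "freehand white strokes on black"),
    "canny": ControlNetSpec(
        "lllyasviel/sd-controlnet-canny", "SD 1.5",
        "StableDiffusionControlNetPipeline", "Canny edge map"),
    "canny-sdxl": ControlNetSpec(
        "diffusers/controlnet-canny-sdxl-1.0", "SDXL 1.0",
        "StableDiffusionXLControlNetPipeline", "Canny edge map"),
}


def pick_controlnet(condition: str) -> ControlNetSpec:
    """Look up a checkpoint by condition type, failing loudly on unknown keys."""
    try:
        return CONTROLNETS[condition]
    except KeyError:
        raise ValueError(
            f"unknown condition {condition!r}; choose from {sorted(CONTROLNETS)}"
        ) from None


spec = pick_controlnet("canny-sdxl")
print(spec.repo_id, "->", spec.pipeline)
```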

User Experience and Community Feedback

Community feedback has been largely positive, with users praising the model's ability to generate impressive results from simple sketches. Platforms like Reddit feature examples of detailed images created with correct hand positions and backgrounds. However, some users note the need for precise sketches to achieve optimal results, emphasizing the importance of input quality.

Conclusion & Next Steps

ControlNet-Scribble is a powerful tool for transforming hand-drawn sketches into photorealistic images, offering flexibility and high-resolution outputs. While it has some limitations, its strengths make it a valuable asset for artists and designers. Future improvements could focus on enhancing its ability to handle complex inputs and expanding its training dataset to cover more niche subjects.

- ControlNet-Scribble excels in high-resolution image generation.

- The model supports a wide range of scribble inputs.

- Complex details and niche subjects can be challenging.

- Community feedback highlights both strengths and areas for improvement.

controlnet-scribble is an innovative AI tool designed to transform simple sketches into detailed artworks using Stable Diffusion and ControlNet. It allows users to input rough doodles and text prompts, which the model then processes to generate high-quality images. This tool is particularly useful for artists, designers, and hobbyists looking to quickly visualize their ideas.

How controlnet-scribble Works

The tool integrates Stable Diffusion, a powerful text-to-image model, with ControlNet, which adds conditional control to the generation process. Users start by drawing a basic sketch, which serves as a structural guide. The AI then interprets this sketch alongside a text prompt to produce a refined image. This combination ensures that the final output aligns with both the user's visual and descriptive inputs.

Key Features and Capabilities

controlnet-scribble supports various artistic styles and subjects, from realistic portraits to fantastical landscapes. It is particularly adept at maintaining the structural integrity of the original sketch while enhancing details. The tool is accessible via platforms like Hugging Face and Replicate, making it easy for users to experiment without needing advanced technical skills.

Applications and Use Cases

This tool has diverse applications, including concept art creation, educational illustrations, and rapid prototyping for designers. It can also be used for storytelling, where quick visualizations of scenes or characters are needed. The ability to turn rough ideas into polished images makes it a valuable asset in creative workflows.

Limitations and Challenges

While controlnet-scribble is powerful, it has some limitations. The quality of the output heavily depends on the clarity of the input sketch and the specificity of the text prompt. Additionally, the model may struggle with highly complex or abstract concepts, requiring users to refine their inputs for better results.

Future Potential and Developments

The open-source nature of controlnet-scribble encourages community contributions, which could lead to significant improvements. Future versions might include better handling of intricate details, integration with newer Stable Diffusion models like SDXL, and expanded training datasets to cover more artistic styles and subjects.

Conclusion

controlnet-scribble represents a major step forward in AI-assisted art creation, bridging the gap between rough sketches and finished artworks. Its combination of user-friendly interfaces and powerful AI capabilities makes it accessible to a wide range of users. Despite its current limitations, ongoing developments and community involvement promise an exciting future for this tool.

- Transforms sketches into detailed artworks using AI

- Integrates Stable Diffusion with ControlNet for enhanced control

- Supports various artistic styles and subjects

- Accessible via platforms like Hugging Face and Replicate

- Open-source nature fosters community-driven improvements

ControlNet Scribble is a powerful tool that enhances the capabilities of text-to-image diffusion models. By providing a simple scribble as input, users can guide the image generation process to produce more accurate and detailed results. This technique is particularly useful for artists and designers who want to maintain creative control while leveraging AI.

Understanding ControlNet Scribble

ControlNet Scribble works by integrating a conditional control mechanism into the diffusion model. This allows the model to interpret rough sketches or scribbles and generate high-quality images based on them. The process involves training the model to recognize and adhere to the structural outlines provided by the user, ensuring that the final output aligns with the intended design.

How It Differs from Traditional Methods

Traditional text-to-image models rely solely on textual prompts, which can sometimes lead to ambiguous or undesired results. ControlNet Scribble, on the other hand, combines textual prompts with visual scribbles, offering a more precise way to communicate the desired outcome. This dual-input approach significantly improves the model's ability to generate relevant and coherent images.
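In the standard Stable Diffusion sampling loop, the text prompt's influence is set by classifier-free guidance. The model predicts the noise twice, once with the prompt and once without, and extrapolates toward the prompted prediction: ε̂ = ε_uncond + s·(ε_text − ε_uncond). Here s is the `guidance_scale`, which defaults to 7.5 in the diffusers ControlNet pipeline. In that pipeline's default mode, the scribble's ControlNet residuals are fed into both predictions. The sketch therefore constrains the layout, while s controls how strongly the text steers the content. The toy below applies the formula to plain lists standing in for noise tensors; the function name is illustrative.

```python
from typing import List


def classifier_free_guidance(eps_uncond: List[float], eps_text: List[float],
                             guidance_scale: float = 7.5) -> List[float]:
    """eps = eps_uncond + s * (eps_text - eps_uncond), element-wise."""
    return [u + guidance_scale * (t - u) for u, t in zip(eps_uncond, eps_text)]


eps_uncond = [0.10, -0.20, 0.00]   # prediction with an empty prompt
eps_text = [0.12, -0.25, 0.04]     # prediction with the text prompt

print(classifier_free_guidance(eps_uncond, eps_text, 0.0))  # s = 0: prompt ignored
print(classifier_free_guidance(eps_uncond, eps_text, 1.0))  # s = 1: text prediction (up to rounding)
print(classifier_free_guidance(eps_uncond, eps_text))       # s = 7.5: pushed past it, toward the prompt
```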

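Applications of ControlNet Scribble

Typical uses include concept art, storyboarding, rapid prototyping, educational illustrations, and quick visualizations for storytelling: anywhere a rough layout needs to become a finished image quickly.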

Conclusion & Next Steps

ControlNet Scribble represents a significant advancement in the field of AI-driven image generation. By bridging the gap between human creativity and machine learning, it opens up new possibilities for artists and designers. Future developments may include more intuitive interfaces and enhanced customization options, further empowering users to bring their visions to life.

- Explore pre-trained models for quick results

- Experiment with different scribble styles to see varied outputs

- Join community discussions to share tips and tricks