Key Points

By John Doe 5 min


Research suggests sam-2-video, based on Meta's Segment Anything Model 2 (SAM 2), uses segmentation to isolate objects in videos, enabling users to create animations by manipulating these objects.

It seems likely that users can track objects with a single click and apply effects like erasing, gradients, or highlighting to animate them.

The evidence leans toward sam-2-video being a tool for interactive video editing, with real-time processing for professional-looking animations.

Introduction to sam-2-video

sam-2-video is a powerful tool derived from Meta's Segment Anything Model 2 (SAM 2), designed for segmenting and tracking objects in videos. This technology allows users to isolate any object with minimal interaction, such as a single click, and manipulate it to create various animations or effects.

How Segmentation Works

Segmentation in sam-2-video means producing a pixel-level mask that separates a chosen object from the rest of each frame. The process uses a transformer-based model with streaming memory for real-time video processing:

- Users provide a prompt, like clicking on an object in a frame.

- The model generates a segmentation mask for that object in the specified frame.

- It then tracks the object across all frames, updating the mask to account for movement and changes.
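The prompt, mask, and track loop above can be illustrated with a toy sketch. This is not SAM 2. A flood fill stands in for the model's mask decoder, and the "memory" is just the intensity of the clicked pixel. Each new frame is compared against that intensity, starting from the previous frame's mask. Frames are assumed to be small grayscale grids (lists of lists of 0-255 values), and the helper names `flood_fill` and `track` are hypothetical.

```python
from collections import deque


def flood_fill(frame, seeds, ref, tol):
    """Toy stand-in for a mask decoder: grow a 4-connected region from `seeds`
    over pixels whose intensity is within `tol` of the reference value `ref`."""
    h, w = len(frame), len(frame[0])
    mask = [[False] * w for _ in range(h)]
    queue = deque()
    for r, c in seeds:
        if not mask[r][c] and abs(frame[r][c] - ref) <= tol:
            mask[r][c] = True
            queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and not mask[nr][nc]
                    and abs(frame[nr][nc] - ref) <= tol):
                mask[nr][nc] = True
                queue.append((nr, nc))
    return mask


def track(frames, click, tol=10):
    """Click prompt on frame 0, then propagate the mask frame by frame.

    `click` is (row, col). The clicked pixel's intensity acts as a crude
    memory of the object's appearance; each new frame is seeded from the
    previous frame's mask, so the object must overlap its last position.
    """
    row, col = click
    ref = frames[0][row][col]
    masks = [flood_fill(frames[0], [click], ref, tol)]
    for frame in frames[1:]:
        prev = masks[-1]
        seeds = [(r, c) for r, line in enumerate(prev)
                 for c, inside in enumerate(line) if inside]
        masks.append(flood_fill(frame, seeds, ref, tol))
    return masks
```

In the official `sam2` package, the corresponding steps are the video predictor's `init_state`, `add_new_points_or_box`, and `propagate_in_video` methods. The toy loop only mimics their shape. Because it seeds each frame from the previous mask, it loses any object that moves farther than its own size between frames. A learned memory avoids that weakness.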

Creating Animations with Segmentation

Once the object is segmented and tracked, users can manipulate the masks to create animations:

- Erasing: Remove the object for effects like making it disappear.

- Applying Gradients: Add smooth color transitions for a polished look.

- Highlighting: Emphasize the object by altering its brightness or color.

- Position and Size Changes: Modify the object's position or size over time to create motion or morphing effects.
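Once a per-frame mask exists, effects like erasing and highlighting reduce to per-pixel compositing. The sketch below assumes grayscale frames. For erasing, it also assumes a clean background plate of the same size. Real object removal usually needs inpainting, which this does not attempt. The function names and the `gain`/`dim` values are illustrative.

```python
def _clamp(value):
    """Round and clamp a pixel value to the 0-255 range."""
    return max(0, min(255, round(value)))


def erase(frame, mask, background):
    """Replace pixels inside the mask with the matching background-plate pixels."""
    return [[background[r][c] if mask[r][c] else px
             for c, px in enumerate(row)]
            for r, row in enumerate(frame)]


def highlight(frame, mask, gain=1.5, dim=0.4):
    """Brighten the masked object and dim everything else."""
    return [[_clamp(px * gain) if mask[r][c] else _clamp(px * dim)
             for c, px in enumerate(row)]
            for r, row in enumerate(frame)]


def apply_to_video(frames, masks, effect, **kwargs):
    """Apply a per-frame effect using each frame's own tracked mask."""
    return [effect(f, m, **kwargs) for f, m in zip(frames, masks)]
```

Because every effect takes the tracked mask for its own frame, the result follows the object as it moves. Varying `gain` per frame would turn the static highlight into a pulse.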

This interactivity makes sam-2-video suitable for video editing and augmented reality, offering real-time feedback for creating professional animations.

Survey Note: Detailed Analysis

The Segment Anything Model 2 (SAM 2) represents a significant leap in computer vision technology, particularly for video segmentation. Developed by Meta AI, this model allows users to interactively segment and track objects in videos with minimal input, such as a single click. The tool is designed to be user-friendly, making advanced segmentation accessible to a broader audience, including animators and content creators.

Overview and Background

SAM 2 was announced on July 29, 2024, and its research paper was published on January 22, 2025, at ICLR 2025. Building on the success of its predecessor, SAM, this model extends its capabilities to handle both images and videos with state-of-the-art performance. The model is promptable, meaning users can specify objects of interest through simple prompts like points, boxes, or masks. This functionality is particularly useful for animation, where precise object isolation is crucial for creative manipulation.

Technical Details of Segmentation in sam-2-video

SAM 2's architecture is based on a transformer with streaming memory, optimized for real-time video processing. For efficiency, its image encoder is a Hierarchical Vision Transformer (Hiera) rather than the ViT-H encoder used by the original SAM. This enables real-time inference at approximately 44 frames per second on NVIDIA A100 GPUs. The segmentation process begins with user interaction: a prompt, such as a click on an object in a video frame, produces a segmentation mask. The model then tracks the object across subsequent frames, updating the mask to handle challenges like occlusion, reappearance, and motion.
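The streaming memory can be pictured as a bounded store that is updated once per frame. The sketch loosely follows the SAM 2 paper's description of two FIFO queues: one for recent frames and one for prompted frames. The class, its queue sizes, and the string "memories" are placeholders. In the real model, each memory is a learned feature representation that the current frame attends to.

```python
from collections import deque


class MemoryBank:
    """Bounded, streaming store of per-frame memories (illustrative only).

    One FIFO queue holds the most recent frames and another holds frames the
    user prompted. The sizes here are assumptions, not SAM 2's settings.
    """

    def __init__(self, max_recent=6, max_prompted=4):
        # deque(maxlen=...) silently drops the oldest entry when full.
        self.recent = deque(maxlen=max_recent)
        # Prompted frames get their own queue, so ordinary frames never
        # push user input out of memory.
        self.prompted = deque(maxlen=max_prompted)

    def add(self, frame_idx, memory, prompted=False):
        """Store the memory computed after segmenting frame `frame_idx`."""
        queue = self.prompted if prompted else self.recent
        queue.append((frame_idx, memory))

    def context(self):
        """Memories the next frame would condition on, prompted frames first."""
        return list(self.prompted) + list(self.recent)
```

Both queues are bounded, so memory use stays constant however long the video is. That is what makes frame-by-frame streaming practical.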

User Interaction and Prompting

Users can interact with the model by providing prompts like points, boxes, or masks to specify objects of interest. The model generates a segmentation mask for the object in the initial frame and uses streaming memory to track it across the video. This feature is particularly useful for animation, where objects need to be isolated and manipulated frame by frame.
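A prompt is a small piece of structured input attached to a frame. The hypothetical `Prompt` class below groups the three prompt types and checks them for consistency. The `box_to_mask` function shows how a box can be rasterized into a coarse initial mask. The 1/0 point-label convention matches SAM-style predictors. In the official `sam2` repository, points and boxes are passed to the video predictor's `add_new_points_or_box` method. Everything else here, including the end-exclusive box convention, is an assumption.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Prompt:
    """Illustrative container for one frame's prompts (not a SAM 2 class).

    Points are (x, y) pixel coordinates; labels are 1 for "part of the
    object" and 0 for "not part of the object". Boxes are (x0, y0, x1, y1)
    with x1/y1 exclusive in this sketch.
    """
    frame_idx: int
    points: List[Tuple[int, int]] = field(default_factory=list)
    labels: List[int] = field(default_factory=list)
    box: Optional[Tuple[int, int, int, int]] = None
    mask: Optional[List[List[bool]]] = None

    def validate(self):
        """Raise ValueError if the prompt is empty or malformed."""
        if len(self.points) != len(self.labels):
            raise ValueError("each point needs exactly one label")
        if any(label not in (0, 1) for label in self.labels):
            raise ValueError("labels must be 1 (foreground) or 0 (background)")
        if not self.points and self.box is None and self.mask is None:
            raise ValueError("provide at least one point, box, or mask")


def box_to_mask(box, height, width):
    """Rasterize an (x0, y0, x1, y1) box into a boolean mask (rows are y)."""
    x0, y0, x1, y1 = box
    return [[x0 <= x < x1 and y0 <= y < y1 for x in range(width)]
            for y in range(height)]
```

Negative points (label 0) are how a user carves a wrongly included region out of a mask, for example a hand that was segmented together with the object it holds.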

Applications in Animation

The ability to segment and track objects in real-time makes SAM 2 a powerful tool for animation. Animators can isolate characters or objects with minimal effort, enabling creative effects and manipulations. The model's efficiency ensures that even complex scenes can be processed quickly, making it suitable for both amateur and professional animators.

Conclusion & Next Steps

SAM 2 represents a significant advancement in video segmentation, offering real-time performance and user-friendly interaction. Its applications in animation are vast, from simplifying object isolation to enabling creative effects. Future developments could further enhance its capabilities, such as integrating with other AI tools for more complex animations.

- Real-time segmentation at approximately 44 FPS on NVIDIA A100 GPUs

- User-friendly prompting with points, boxes, or masks

- Efficient handling of object occlusion and motion

The sam-2-video model by Meta is a powerful tool for video segmentation and tracking, enabling users to isolate and manipulate objects in videos with high precision. This capability opens up new possibilities for creating animations by leveraging the model's segmentation masks and tracking features.

Understanding sam-2-video's Segmentation and Tracking

The sam-2-video model excels at generating segmentation masks for objects in videos, allowing users to isolate specific elements frame by frame. Its memory-based tracking maintains continuity through motion and occlusion. However, it can lose or confuse objects in crowded scenes, after long occlusions, or across shot changes. It may also struggle with objects that have thin details or have similar-looking objects nearby.

Key Features of Segmentation

The segmentation masks produced by sam-2-video are highly accurate, enabling precise isolation of objects. These masks can be used for various effects, such as object removal or highlighting, which are essential for creating animations. The model's real-time processing makes it suitable for dynamic applications.
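Accuracy claims like these are usually backed by overlap metrics. Video object segmentation benchmarks commonly report the J&F score. J is the region similarity between predicted and ground-truth masks, measured as intersection over union (IoU). F measures boundary accuracy. The sketch computes only J, per frame and averaged over a clip. Treating two empty masks as a perfect match is a convention chosen here, not a benchmark rule.

```python
def iou(pred, truth):
    """Intersection over union (Jaccard index) of two same-sized boolean masks."""
    inter = union = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            inter += bool(p and t)
            union += bool(p or t)
    # Convention used here: two empty masks count as a perfect match.
    return 1.0 if union == 0 else inter / union


def mean_iou(pred_masks, true_masks):
    """Average per-frame IoU over a clip (the J part of J&F)."""
    scores = [iou(p, t) for p, t in zip(pred_masks, true_masks)]
    return sum(scores) / len(scores)
```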

Leveraging Segmentation for Animation

The segmentation masks generated by sam-2-video serve as the foundation for creating animations. By manipulating these masks, users can produce effects like object removal, gradient transitions, and burst highlights. These effects are provided by Meta's interactive SAM 2 demo rather than by the model itself, which outputs only masks. The demo lets users make these adjustments interactively and in real time.
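Because effects run on top of the masks, a gradient can be reproduced outside the demo by blending a color ramp into the masked pixels only. The hypothetical `gradient_fill` below spans the ramp across the object's horizontal extent in each frame, so the gradient moves with the object. Grayscale frames and a left-to-right ramp are assumptions. The demo's own gradient may be implemented differently.

```python
def gradient_fill(frame, mask, start=0, end=255, alpha=1.0):
    """Blend a left-to-right ramp from `start` to `end` into masked pixels.

    The ramp spans the object's own horizontal extent, so it follows the
    object as its mask moves. alpha=1.0 replaces the pixels outright.
    """
    cols = [c for row in mask for c, inside in enumerate(row) if inside]
    if not cols:
        return [row[:] for row in frame]  # object not visible: unchanged copy
    c0, c1 = min(cols), max(cols)
    span = max(c1 - c0, 1)  # avoid dividing by zero for one-pixel-wide objects
    out = []
    for r, row in enumerate(frame):
        new_row = []
        for c, px in enumerate(row):
            if mask[r][c]:
                ramp = start + (end - start) * (c - c0) / span
                px = max(0, min(255, round((1 - alpha) * px + alpha * ramp)))
            new_row.append(px)
        out.append(new_row)
    return out
```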

Practical Applications

The ability to change an object's position, size, or color over frames enables the creation of motion paths and morphing effects. This is particularly useful for video editing and augmented reality applications, where seamless integration of animated elements is crucial.
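Position and size changes over frames are typically driven by keyframes. The sketch linearly interpolates hypothetical (x, y, scale) keyframes, then moves and scales a mask around an anchor pixel. It uses inverse nearest-neighbor mapping so enlarged masks have no holes. Linear interpolation is simply the easiest choice; an easing curve could replace `lerp`. Keyframe indices are assumed to be strictly increasing.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def interpolate_keyframes(keyframes, n_frames):
    """Per-frame (x, y, scale) from sorted (frame_idx, x, y, scale) keyframes.

    Values are held constant before the first and after the last keyframe.
    """
    out = []
    for f in range(n_frames):
        if f <= keyframes[0][0]:
            out.append(tuple(keyframes[0][1:]))
        elif f >= keyframes[-1][0]:
            out.append(tuple(keyframes[-1][1:]))
        else:
            for (f0, *a), (f1, *b) in zip(keyframes, keyframes[1:]):
                if f0 <= f <= f1:
                    t = (f - f0) / (f1 - f0)
                    out.append(tuple(lerp(p, q, t) for p, q in zip(a, b)))
                    break
    return out


def transform_mask(mask, anchor, x, y, scale):
    """Move `anchor` (row, col) to (y, x) and scale the mask about it.

    Each output pixel looks up its source pixel (inverse mapping), so
    scaling up fills every covered pixel instead of leaving gaps.
    """
    h, w = len(mask), len(mask[0])
    ar, ac = anchor
    out = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            sr = round(ar + (r - y) / scale)
            sc = round(ac + (c - x) / scale)
            if 0 <= sr < h and 0 <= sc < w:
                out[r][c] = mask[sr][sc]
    return out
```

Feeding each frame's interpolated (x, y, scale) into `transform_mask` yields a motion path. Interpolating between two different masks instead would be the starting point for a morph.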

Practical Steps for Using sam-2-video for Animation

To create animations using sam-2-video, users can follow a straightforward process. First, upload a video to the demo platform. Then, use the interactive tools to segment and track objects. Finally, apply effects like erase, gradient, or burst to achieve the desired animation.

- Upload a video to the demo platform

- Segment and track objects using the interactive tools

- Apply effects like erase, gradient, or burst

- Export the final animation for use in projects
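The demo handles export itself. For a script-based workflow, one dependency-free option is to write each processed frame as a binary PGM image, a simple grayscale format. The numbered sequence can then be assembled into a video with an external encoder such as ffmpeg. The file naming scheme and helper names below are assumptions, and frames are assumed to be grayscale 0-255 grids.

```python
import os


def write_pgm(path, frame):
    """Write one grayscale frame as a binary (P5) PGM file."""
    h, w = len(frame), len(frame[0])
    header = f"P5\n{w} {h}\n255\n".encode("ascii")
    # Clamp to a byte; effects may have produced out-of-range values.
    data = bytes(max(0, min(255, int(px))) for row in frame for px in row)
    with open(path, "wb") as f:
        f.write(header + data)


def export_frames(frames, out_dir, prefix="frame"):
    """Write frames as zero-padded, numbered PGM files and return their paths."""
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for i, frame in enumerate(frames):
        path = os.path.join(out_dir, f"{prefix}_{i:05d}.pgm")
        write_pgm(path, frame)
        paths.append(path)
    return paths
```

Zero-padded numbering keeps the files in order for tools that read image sequences by pattern.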

Conclusion & Next Steps

The sam-2-video model offers a robust solution for creating animations through advanced segmentation and tracking. Its real-time capabilities and interactive tools make it accessible for both beginners and professionals. Future developments may further enhance its animation potential.

SAM-2-Video is an advanced tool for video segmentation and tracking, built on Meta's Segment Anything Model 2 (SAM 2), that isolates and animates objects in videos. It allows users to select and track objects across frames, enabling creative effects like erasing, highlighting, or animating elements.

How SAM-2-Video Works

The tool uses point, box, and mask prompts, alone or in combination, to segment objects in videos. Once an object is selected, SAM-2-Video tracks it across frames. When several objects are tracked at once, each is processed separately. The objects share the per-frame image embeddings but do not exchange information with each other. These capabilities suit applications like video editing, gaming analysis, and augmented reality.
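This multi-object design computes the expensive image encoding once per frame and shares it, while each object is decoded separately with its own state. The sketch mirrors that structure with placeholder `encode` and `decode` callables. Both are hypothetical, standing in for the image encoder and the per-object mask decoding. The sketch counts encoder calls to show that encoding cost does not grow with the number of objects.

```python
class SharedEmbeddingTracker:
    """Encode each frame once; decode every object independently from it."""

    def __init__(self, frames, encode, decode):
        self.frames = frames
        self._encode = encode   # frame -> embedding (placeholder)
        self._decode = decode   # (embedding, state) -> (new_state, output)
        self._cache = {}
        self.encode_calls = 0

    def embedding(self, idx):
        """Return the cached embedding for frame `idx`, computing it on first use."""
        if idx not in self._cache:
            self.encode_calls += 1
            self._cache[idx] = self._encode(self.frames[idx])
        return self._cache[idx]

    def track(self, initial_states):
        """Run every object through every frame; objects never see each other."""
        states = dict(initial_states)
        outputs = {obj_id: [] for obj_id in states}
        for idx in range(len(self.frames)):
            emb = self.embedding(idx)
            for obj_id in list(states):
                # Only this object's own state is passed in.
                states[obj_id], out = self._decode(emb, states[obj_id])
                outputs[obj_id].append(out)
        return outputs
```

The trade-off is visible in the structure. Decoding cost scales with the number of objects, and because objects never exchange information, one object cannot help disambiguate another that looks similar.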

Step-by-Step Process

To use SAM-2-Video, users upload a video and select an object to segment. The model then generates masks for the object across all frames. These masks can be exported for further editing or used directly within the tool to apply effects like gradients or animations.
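Exported masks are often stored run-length encoded, since they consist mostly of long runs of background or foreground. The sketch uses a simple row-major scheme whose counts alternate between background and foreground, starting with background. COCO-style RLE, as used by pycocotools, follows the same alternation but scans pixels in column-major order. The two are therefore not interchangeable without transposing. The `size`/`counts` dictionary layout is an assumption modeled loosely on COCO's fields.

```python
def rle_encode(mask):
    """Row-major run lengths, starting with a (possibly empty) background run."""
    flat = [bool(v) for row in mask for v in row]
    counts, current, run = [], False, 0
    for v in flat:
        if v == current:
            run += 1
        else:
            counts.append(run)
            current, run = v, 1
    counts.append(run)
    return {"size": [len(mask), len(mask[0])], "counts": counts}


def rle_decode(rle):
    """Inverse of rle_encode: rebuild the boolean mask row by row."""
    h, w = rle["size"]
    flat, value = [], False
    for n in rle["counts"]:
        flat.extend([value] * n)
        value = not value
    return [flat[r * w:(r + 1) * w] for r in range(h)]
```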

Challenges and Limitations

While powerful, SAM-2-Video may struggle with complex scenes, such as those with shot changes or crowded environments. Objects with thin details or similar appearances nearby can also pose challenges, requiring additional refinement for accurate segmentation.
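Shot changes are a known failure case. A common workaround is to detect hard cuts before tracking and prompt each shot separately. The sketch flags a cut when the mean absolute pixel difference between consecutive grayscale frames exceeds a threshold. The threshold of 40 is an arbitrary assumption. This heuristic is not part of SAM 2 and will miss gradual transitions such as fades.

```python
def mean_abs_diff(a, b):
    """Mean absolute per-pixel difference between two same-sized frames."""
    total = sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    return total / (len(a) * len(a[0]))


def find_shot_changes(frames, threshold=40.0):
    """Indices i where frame i looks like a hard cut from frame i - 1."""
    return [i for i in range(1, len(frames))
            if mean_abs_diff(frames[i - 1], frames[i]) > threshold]


def split_into_shots(n_frames, cuts):
    """Turn cut indices into half-open (start, end) frame ranges."""
    bounds = [0] + list(cuts) + [n_frames]
    return list(zip(bounds, bounds[1:]))
```

Each resulting range can then be treated as its own clip, with a fresh prompt on its first frame.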

Use Cases and Examples

SAM-2-Video has been used in various creative projects, from cinematic effects to game analysis. For instance, a Reddit user demonstrated its use in tracking Smash Bros. characters, highlighting their movements for animated overlays.
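The source does not say how that overlay was built. One plausible approach, sketched here as an assumption, is to take the centroid of each frame's mask and keep the last few centroids as a trail to draw. Frames where the object is not visible are skipped. The helper names and the trail length are hypothetical.

```python
def centroid(mask):
    """(row, col) center of the masked pixels, or None if the mask is empty."""
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        return None
    return (sum(r for r, _ in pts) / len(pts), sum(c for _, c in pts) / len(pts))


def motion_trail(masks, length=5):
    """For each frame, the last `length` centroids where the object was visible."""
    trail, history = [], []
    for m in masks:
        c = centroid(m)
        if c is not None:
            history.append(c)
        trail.append(history[-length:])  # slicing copies, so entries stay fixed
    return trail
```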

Conclusion & Next Steps

SAM-2-Video offers a versatile solution for video segmentation and animation, though it may require additional tools for complex scenarios. Future updates could address its limitations, making it even more robust for professional use.

- Supports point, box, and mask prompts

- Tracks objects across frames

- Ideal for video editing and AR

SAM 2 (Segment Anything Model 2) is an advanced AI model developed by Meta AI, designed for interactive segmentation in both images and videos. It builds upon the capabilities of the original SAM model, offering enhanced accuracy and efficiency in segmenting objects with minimal user input. The model is particularly useful for tasks like object removal, animation, and motion tracking in videos.

Key Features of SAM 2

SAM 2 introduces several improvements over its predecessor, including better segmentation accuracy and faster processing times. It can segment objects in videos with just a few clicks, making it highly interactive and user-friendly. The model is trained on a large, diverse dataset, including the SA-V video dataset that Meta released alongside it. This lets it handle a wide range of objects and scenes effectively.

Video Segmentation Capabilities

One of the standout features of SAM 2 is its ability to segment objects in videos with minimal user interaction. Users can click on an object in a video, and the model will track and segment it across frames. This is particularly useful for creating animations, removing objects, or applying effects to specific elements in a video.

Applications in Animation

SAM 2's interactive segmentation makes it a powerful tool for animators. By isolating objects in videos, animators can manipulate them to create various effects. For example, they can erase objects, apply gradients, or highlight motion paths. The model's real-time processing capabilities ensure that these tasks can be performed efficiently.

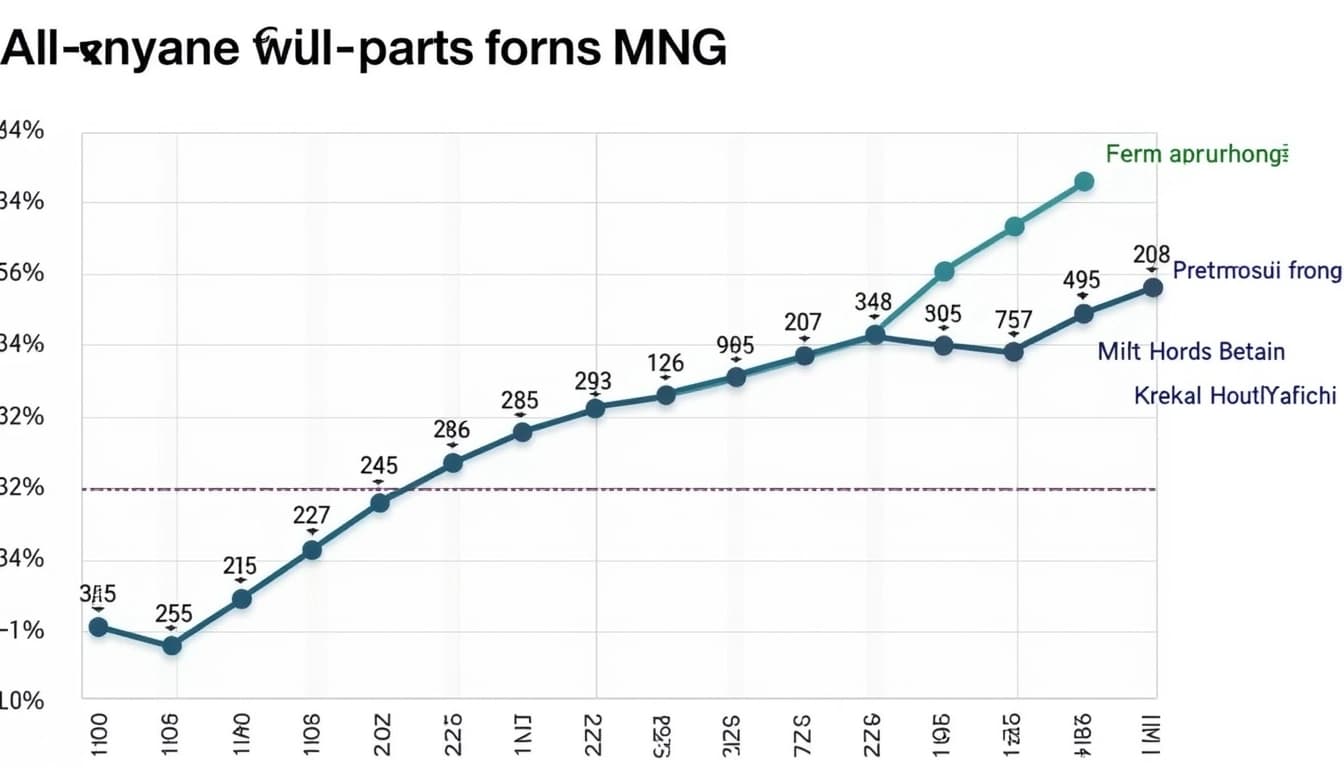

Comparative Analysis

Compared to previous models, SAM 2 offers significant improvements. In video segmentation, it achieves better accuracy with 3x fewer interactions than prior approaches. In image segmentation, it's 6x faster and more accurate than the original SAM. These advancements make it a valuable tool for both professionals and hobbyists.

Conclusion

SAM 2 represents a milestone in video and image segmentation, offering advanced capabilities with ease of use. Its interactive features and real-time processing make it accessible for a wide range of applications, from professional animation to casual video editing. While it has some limitations, its overall performance and versatility position it as a leading tool in the field.

- Improved accuracy in video segmentation

- Faster processing times

- Interactive and user-friendly

This article covers the various aspects of web design and how they affect the user experience. We will look at the fundamentals and discuss some advanced techniques.

The Importance of Web Design

Web design is not just the visual design of a website; it also covers structure and usability. Good design can increase how long visitors stay and improve the conversion rate. It is important to pay attention to both aesthetics and functionality.

Responsive Design

Responsive design ensures that a website looks good and works well on all devices. With the growing use of mobile devices, it has become an indispensable part of web design. There are various techniques for achieving this, such as flexible grids and media queries.
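Media queries select styles based on viewport width, and flexible grids divide the available width among columns. The Python model below illustrates that behavior. In a real site, this logic is expressed in CSS. Mobile-first `min-width` rules are checked in order, and later matching rules override earlier ones. The breakpoint values and the gap width are illustrative assumptions.

```python
# Hypothetical mobile-first breakpoints: (min-width in CSS pixels, grid columns).
BREAKPOINTS = [(0, 1), (600, 2), (900, 3), (1200, 4)]


def columns_for(viewport_width):
    """Emulate min-width media queries: the last matching rule wins."""
    cols = None
    for min_width, n in BREAKPOINTS:
        if viewport_width >= min_width:
            cols = n
    return cols


def column_width(viewport_width, gap=16):
    """Flexible grid: columns share whatever width is left after the gaps."""
    n = columns_for(viewport_width)
    return (viewport_width - gap * (n - 1)) / n
```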

Colors and Typography

The Future of Web Design

The future of web design will be shaped by new technologies such as AI and VR. These developments will fundamentally change the way we design and use websites. It is important to prepare for these changes and to keep learning.

- Responsive Design

- Usability

- Visual Aesthetics