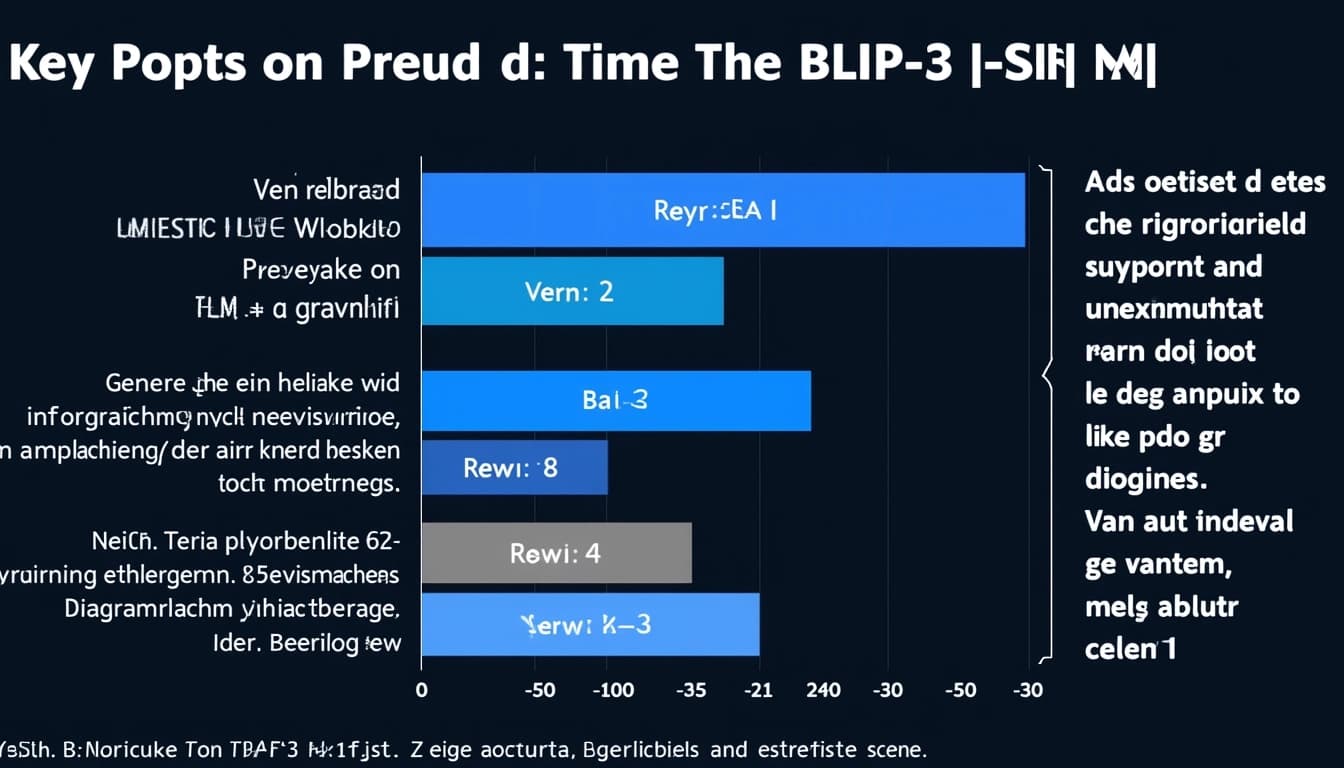

Key Points on BLIP-3 (xGen-MM)

By John Doe 5 min

Key Points

- Research suggests BLIP-3, also known as xGen-MM, builds on BLIP and BLIP-2 with enhanced features for vision-language tasks.

- It seems likely that BLIP-3 improves scalability with a perceiver resampler, replacing BLIP-2's Querying Transformer.

- The evidence leans toward BLIP-3 using diverse, large-scale datasets like MINT-1T and supporting complex, multi-image inputs.

- It appears BLIP-3 simplifies training with a single auto-regressive loss and includes safety features to reduce hallucinations.

Overview of BLIP-3

BLIP-3, or xGen-MM, is a large multimodal model developed by Salesforce AI Research. It is designed for tasks that involve both images and text, such as visual question answering and image captioning. It builds on the BLIP series, which began with BLIP and continued with BLIP-2, each advancing vision-language pre-training.

Improvements Over Predecessors

BLIP-3 introduces several enhancements compared to BLIP and BLIP-2, focusing on scalability, efficiency, and versatility:

- Model Architecture: BLIP-3 replaces the Querying Transformer (Q-Former) used in BLIP-2 with a perceiver resampler, allowing for efficient handling of any-resolution image inputs. This change improves scalability, especially for high-resolution or multiple images.

- Training Data: It uses a diverse set of datasets, including MINT-1T, OBELICS, BLIP3-KALE, BLIP3-OCR-200M, and BLIP3-GROUNDING-50M, totaling ~100B multimodal tokens, offering richer and more varied data compared to BLIP-2's 129 million image-text pairs.

- Training Objectives: BLIP-3 simplifies training by using a single auto-regressive loss for text tokens in a multimodal context, moving away from BLIP-2's multiple losses (ITM, ITC, ITG).

- Input Capabilities: Unlike BLIP-2, which was limited to single-image inputs, BLIP-3 supports free-form multimodal interleaved texts and vision tokens, enabling complex, multi-image tasks.

- Performance and Safety: BLIP-3 shows improved performance in vision-language tasks and includes safety features to reduce hallucinations.

BLIP-3 (xGen-MM) represents a significant evolution in the field of open large multimodal models, building on the foundations laid by BLIP-2. The new model introduces several key improvements, including a more scalable architecture, richer training data, and a streamlined training process. These advancements aim to address some of the limitations observed in BLIP-2, particularly in handling high-resolution images and diverse multimodal tasks.

Model Architecture: A Scalable Leap Forward

One of the most significant changes in BLIP-3 is the replacement of BLIP-2's Q-Former with a perceiver resampler for vision token sampling. In BLIP-2, the Q-Former was a lightweight transformer whose fixed set of learnable query tokens extracted visual features from the frozen image encoder and passed them on to the language model, but it posed scalability challenges for variable-sized inputs. BLIP-3 addresses this with a perceiver resampler, the vision token sampler used in Flamingo, which efficiently downsamples a variable number of vision tokens into a fixed number of output tokens.
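
To make the resampler idea concrete, here is a minimal, dependency-free sketch of one perceiver-resampler layer: a fixed set of latent queries cross-attends to however many vision tokens the encoder produces, so the output length depends only on the number of queries. The sizes (729 input tokens, 128 queries, width 8), the single attention head, and the random initialization are illustrative assumptions. Real resamplers stack several layers with multi-head attention, layer norms, and feed-forward blocks, and this is not xGen-MM's released code.

```python
import math
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows: (n x k) @ (k x m) -> (n x m)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class PerceiverResamplerLayer:
    """One single-head cross-attention layer with latent queries (illustrative sizes)."""

    def __init__(self, num_queries, dim, seed=0):
        rng = random.Random(seed)
        # Latent query tokens: learned in a real model, randomly initialized here.
        self.latents = rand_matrix(num_queries, dim, rng)
        self.w_q = rand_matrix(dim, dim, rng)
        self.w_k = rand_matrix(dim, dim, rng)
        self.w_v = rand_matrix(dim, dim, rng)
        self.dim = dim

    def __call__(self, vision_tokens):
        # As in Flamingo, keys/values come from the vision tokens concatenated with the latents.
        kv_input = vision_tokens + self.latents
        q = matmul(self.latents, self.w_q)
        k = matmul(kv_input, self.w_k)
        v = matmul(kv_input, self.w_v)
        k_t = [list(col) for col in zip(*k)]  # transpose: dim x (N + M)
        scale = 1.0 / math.sqrt(self.dim)
        weights = [softmax([s * scale for s in row]) for row in matmul(q, k_t)]
        attended = matmul(weights, v)  # M x dim
        # Residual update: the output has num_queries rows, whatever N was.
        return [[a + b for a, b in zip(lat, att)] for lat, att in zip(self.latents, attended)]


vision_tokens = rand_matrix(729, 8, random.Random(1))  # e.g. 729 patch tokens of width 8
resampler = PerceiverResamplerLayer(num_queries=128, dim=8)
print(len(vision_tokens), "->", len(resampler(vision_tokens)))  # 729 -> 128
```

The key design choice is that the number of latent queries, not the image resolution, fixes the number of tokens the language model sees.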

Architecture Details

The architecture consists of a Vision Transformer (ViT) image encoder, with options like SigLIP for improved visual representations that are particularly beneficial for OCR tasks, a perceiver resampler, and a pre-trained language model. Any-resolution image inputs are handled through patch-wise encoding: the image is split into patches that are encoded separately, and these patch encodings are concatenated with an encoding of the downsized original image, which supplies global information. The perceiver resampler then reduces the vision-token sequence length by a factor of five or more, depending on the number of query tokens.
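
The patch-wise scheme can be sketched as a small planning step that decides the patch grid and counts vision tokens before and after resampling. Every concrete choice here is an assumption for illustration: 384-pixel patches, a simple ceiling-based grid capped at 4×4, 729 encoder tokens and 128 resampler queries per view, and resampling each view separately. xGen-MM's actual grid selection and sizes may differ.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Crop:
    left: int
    top: int
    right: int
    bottom: int


def plan_any_resolution(width, height, base=384, max_grid_side=4):
    """Hypothetical any-resolution plan: a rows x cols grid of base-sized patches.

    Crop boxes are in the coordinates of the image resized to (cols * base, rows * base).
    A downsized copy of the whole image is encoded as one extra global view.
    """
    cols = max(1, min(math.ceil(width / base), max_grid_side))
    rows = max(1, min(math.ceil(height / base), max_grid_side))
    crops = [
        Crop(c * base, r * base, (c + 1) * base, (r + 1) * base)
        for r in range(rows)
        for c in range(cols)
    ]
    return rows, cols, crops


def vision_token_count(num_views, tokens_per_view=729, queries_per_view=128):
    """Vision tokens before/after resampling, assuming each view is resampled separately."""
    return num_views * tokens_per_view, num_views * queries_per_view


rows, cols, crops = plan_any_resolution(1000, 700)
num_views = len(crops) + 1  # grid patches plus the downsized global view
before, after = vision_token_count(num_views)
print(f"{rows}x{cols} grid, {num_views} views: {before} -> {after} vision tokens")
```

For a 1000×700 image this plans a 2×3 grid plus one global view, so seven views whose combined token count shrinks by the same per-view ratio.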

Training Data: Richness and Diversity

BLIP-3's training data is notably more comprehensive than BLIP-2's. While BLIP-2 was pre-trained on 129 million image-text pairs, BLIP-3 leverages ~100B multimodal tokens from a diverse mix of datasets. These include two large interleaved image-text corpora: MINT-1T, with roughly one trillion tokens, and OBELICS, an open web-scale collection of filtered web documents containing interleaved images and text.

Conclusion & Next Steps

BLIP-3 represents a major step forward in the development of open multimodal models. Its scalable architecture and rich training data make it a versatile tool for a wide range of applications. Future work may focus on further optimizing the training process and expanding the model's capabilities to handle even more complex multimodal tasks.

- Scalable architecture with perceiver resampler

- Rich and diverse training data

- Improved handling of high-resolution images

BLIP-3 represents a significant evolution from its predecessor, BLIP-2, particularly in terms of dataset diversity, training objectives, and input capabilities. The model leverages a more varied dataset, including specialized subsets such as BLIP3-KALE (knowledge-augmented dense captions) and BLIP3-OCR-200M (images with OCR annotations), which target tasks like detailed captioning and reading text in images. This diversity contrasts with BLIP-2's more uniform web-sourced data and may lead to better generalization across varied scenarios.

Training Objectives: Simplification for Efficiency

In its first pre-training stage, BLIP-2 trained the Q-Former with a suite of three losses, Image-Text Contrastive (ITC), Image-Text Matching (ITM), and Image-grounded Text Generation (ITG), which had to be balanced during training. BLIP-3 simplifies this by adopting a single auto-regressive loss on text tokens in a multimodal context, akin to large language model training but with vision tokens included in the input sequence. This unified approach streamlines the training process and may improve model coherence while reducing computational overhead.
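
A single auto-regressive loss restricted to text tokens amounts to next-token cross-entropy in which vision positions never serve as prediction targets. The sketch below shows that masking on a toy 3-token vocabulary; the function and argument names are hypothetical. In PyTorch code the same effect is commonly achieved by setting the labels at vision positions to the `ignore_index` of `torch.nn.functional.cross_entropy` (default -100).

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one vocabulary-sized list of scores."""
    peak = max(logits)
    log_z = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return [x - log_z for x in logits]


def text_only_autoregressive_loss(logits, targets, is_text):
    """Mean next-token cross-entropy, counted only where the target is a text token.

    logits[t] scores the token at position t + 1, targets[t] is that token's id,
    and is_text[t] is False when the target is a vision token (excluded from the loss).
    """
    total, count = 0.0, 0
    for row, target, keep in zip(logits, targets, is_text):
        if not keep:
            continue  # vision positions are inputs only, never prediction targets
        total -= log_softmax(row)[target]
        count += 1
    if count == 0:
        raise ValueError("sequence has no text targets")
    return total / count


# Toy sequence over a 3-token vocabulary: the first target is a vision token.
logits = [[9.0, -9.0, 0.0], [5.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
targets = [1, 0, 2]
is_text = [False, True, True]
print(round(text_only_autoregressive_loss(logits, targets, is_text), 4))
```

Because the masked position is skipped entirely, changing its logits leaves the loss unchanged; only the two text targets contribute.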

Input Capabilities: Handling Complexity

A key advancement in BLIP-3 is its ability to process free-form multimodal interleaved texts and vision tokens, not limited to single-image inputs as in BLIP-2. This capability enables BLIP-3 to handle sequences where text and image information are mixed in any order, such as multiple images with interspersed textual queries. This is particularly useful for applications like visual storytelling or multi-image question answering, expanding its utility beyond BLIP-2's scope.
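
One way to picture free-form interleaving is to flatten text and images, in their original order, into a single sequence, with each image expanded into a fixed number of vision-token slots. This sketch is hypothetical throughout: `ImageRef`, the `<vis:...>` placeholder strings, and whitespace splitting (standing in for a real tokenizer) are illustrative, and the `is_text` mask is what a text-only loss would use to skip vision slots.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class ImageRef:
    name: str  # stand-in for pixel data


Segment = Union[str, ImageRef]


def build_interleaved(segments: List[Segment], tokens_per_image: int = 128) -> Tuple[List[str], List[bool]]:
    """Flatten free-form text/image segments, in their original order, into one sequence.

    Whitespace splitting stands in for a real tokenizer; each image becomes
    tokens_per_image placeholder slots that its resampled vision tokens would fill.
    """
    tokens: List[str] = []
    is_text: List[bool] = []
    for seg in segments:
        if isinstance(seg, ImageRef):
            tokens.extend(f"<vis:{seg.name}:{i}>" for i in range(tokens_per_image))
            is_text.extend([False] * tokens_per_image)
        else:
            words = seg.split()
            tokens.extend(words)
            is_text.extend([True] * len(words))
    return tokens, is_text


segments = ["Compare the two photos.", ImageRef("before"), "and", ImageRef("after"), "What changed?"]
tokens, is_text = build_interleaved(segments, tokens_per_image=4)
print(len(tokens), sum(is_text))
```

Since images can appear anywhere in the segment list, the same routine covers a single image, several images, or text wrapped around and between them.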

Performance: Competitive and Versatile

BLIP-3's performance is evaluated across a range of tasks, including both single and multi-image benchmarks. It exhibits strong in-context learning capabilities, making it a versatile tool for various applications. The model's ability to handle complex inputs and its simplified training objectives contribute to its competitive edge over BLIP-2.

Conclusion & Next Steps

BLIP-3's advancements in dataset diversity, training efficiency, and input handling make it a robust and flexible model for multimodal tasks. Future developments could focus on further optimizing the model's performance and expanding its applicability to even more complex scenarios. The streamlined training process and enhanced input capabilities position BLIP-3 as a significant step forward in the field of multimodal AI.

- BLIP-3 leverages diverse datasets for better generalization.

- Simplified training objectives improve efficiency and coherence.

- Enhanced input capabilities enable handling of complex multimodal sequences.

BLIP-3, also known as xGen-MM, is a significant advancement in vision-language models, building upon the foundation laid by BLIP-2. It introduces several key improvements, including a more efficient architecture and enhanced multimodal capabilities. The model is designed to handle complex tasks involving both images and text, making it a versatile tool for various applications.

Key Innovations in BLIP-3

One of the standout features of BLIP-3 is its improved vision-language connector: it replaces the Q-Former used in BLIP-2 with a perceiver resampler, a simpler and more scalable vision token sampler. This change allows for better alignment between visual and textual data, leading to stronger performance in tasks like image captioning and visual question answering. Additionally, BLIP-3 is trained on a larger and more diverse dataset, enhancing its ability to generalize across domains.

Enhanced Multimodal Capabilities

BLIP-3 handles multi-image inputs, which BLIP-2 did not support. This capability could be useful for applications that require analyzing several images together, such as medical imaging or autonomous driving. The model's ability to process and correlate information across multiple images sets it apart from its predecessors.

Performance and Benchmarks

BLIP-3 demonstrates competitive performance among open-source large multimodal models (LMMs) of similar sizes. It outperforms MM1-3B on benchmarks like TextCaps, TextVQA, and VQA-v2, showcasing its superior understanding and generation capabilities. These improvements are attributed to the model's refined architecture and the use of high-quality training data.

Safety and Ethical Considerations

BLIP-3 introduces a safety-tuned model, trained with Direct Preference Optimization (DPO) and safety fine-tuning, to mitigate harmful behaviors such as hallucinations, that is, generated content not grounded in the input. The safety tuning reduces the Attack Success Rate (ASR) on the VLGuard benchmark from 56.6 to 5.2, making the model's responses safer and more reliable. This focus on responsible deployment is a notable advancement over BLIP-2.
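
DPO is a general preference-tuning technique, and its per-pair loss has a compact standard form: it rewards the policy for raising the log-probability of the preferred response, relative to a frozen reference model, more than that of the dispreferred one. The sketch below implements that generic form. The preference data and hyperparameters xGen-MM used are not given here, so `beta=0.1` is an illustrative value and this is not the model's training code.

```python
import math


def softplus(x):
    """log(1 + exp(x)), computed without overflow."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Per-pair DPO loss from summed log-probabilities of the preferred (chosen)
    and dispreferred (rejected) responses under the trained policy and a frozen reference."""
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    return softplus(-margin)  # equals -log(sigmoid(margin))


def mean_dpo_loss(pairs, beta=0.1):
    """Average DPO loss over (policy_chosen, policy_rejected, ref_chosen, ref_rejected) tuples."""
    return sum(dpo_loss(*p, beta=beta) for p in pairs) / len(pairs)


print(dpo_loss(-12.0, -12.0, -12.0, -12.0))  # identical policy and reference: log 2
print(dpo_loss(-10.0, -12.0, -11.0, -11.0))  # policy already favours the chosen answer
```

When the policy matches the reference the loss is log 2; it falls below that as the policy shifts probability toward preferred, grounded answers.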

Conclusion & Next Steps

BLIP-3 represents a major leap forward in vision-language models, offering enhanced performance, safety, and versatility. Its open-source nature encourages community involvement and further innovation. Future work may focus on expanding its capabilities to even more complex multimodal tasks and improving its efficiency for real-time applications.

- Improved vision-language connector

- Enhanced multimodal capabilities

- Superior performance on benchmarks

- Safety-tuned model with DPO

- Open-source contribution

BLIP-3, also known as xGen-MM, represents a significant advancement in the BLIP series of vision-language models. It builds upon the successes of its predecessors while introducing several key innovations to improve scalability, performance, and safety. The model is designed to handle complex multimodal tasks more efficiently, making it a robust tool for both research and practical applications.

Key Innovations in BLIP-3

One of the most notable improvements in BLIP-3 is the replacement of the Q-Former with a perceiver resampler. This change allows for scalable vision token sampling, significantly reducing the sequence length by a factor of five or more. Additionally, BLIP-3 simplifies the training process by using a single auto-regressive loss for text tokens in a multimodal context, unlike BLIP-2, which employed multiple losses.
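
As a rough worked example of where "a factor of five or more" can come from, assume (these numbers are not stated in the text) a SigLIP encoder with 14-pixel patches on 384×384 crops, and 128 resampler queries per crop:

```latex
\left\lfloor \frac{384}{14} \right\rfloor^{2} = 27^{2} = 729 \ \text{encoder tokens per crop},
\qquad
\frac{729 \ \text{encoder tokens}}{128 \ \text{queries}} \approx 5.7
```

Under these assumptions, fewer queries would give a larger reduction and more queries a smaller one.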

Enhanced Input Capabilities

BLIP-3 supports free-form multimodal interleaved texts and vision tokens, including multi-image inputs. This flexibility makes it more versatile compared to BLIP-2, which was limited to single-image inputs. The ability to handle any-resolution vision token sampling further enhances its scalability and efficiency.

Training and Performance

BLIP-3 was pre-trained on approximately 100 billion multimodal tokens from diverse datasets, including MINT-1T. This extensive training data contributes to its strong performance across various vision-language tasks. The model also includes safety features, such as a safety-tuned version with DPO to mitigate hallucinations, addressing a critical concern in multimodal AI.

Conclusion & Next Steps

BLIP-3 marks a significant step forward in the evolution of vision-language models. Its focus on scalability, safety, and versatility positions it as a valuable tool for future research and applications. The model's open-source nature further encourages community involvement and innovation in the field of multimodal AI.

- Scalable vision token sampling with perceiver resampler

- Simplified training objectives with auto-regressive loss

- Support for multi-image and free-form multimodal inputs

- Safety features to mitigate hallucinations

The paper 'BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models' introduces an innovative approach to multimodal learning. It focuses on leveraging frozen pre-trained image encoders and large language models to enhance performance in vision-language tasks.

Key Contributions of BLIP-2

BLIP-2 presents a framework that efficiently bridges the gap between vision and language models. Because the image encoder and language model stay frozen and only the lightweight Querying Transformer (Q-Former) and a small projection layer are trained, it reduces computational costs while maintaining high performance. At the time of publication, the method achieved state-of-the-art results on various vision-language benchmarks.

Architecture Overview

The architecture of BLIP-2 consists of a frozen image encoder and a frozen large language model, connected by a lightweight querying transformer. This design allows for efficient training and scalability, making it suitable for large-scale applications.
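
To make the cost argument concrete, this sketch counts trainable versus frozen parameters in a BLIP-2-style stack. The Q-Former size (about 188M parameters, counting its 32 learned queries) follows the BLIP-2 paper. The roughly 1B-parameter ViT-g/14 encoder and the 2.7B-parameter OPT language model are approximate, illustrative choices, and the small trainable projection layer between the Q-Former and the LLM is omitted.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    params: int  # approximate parameter count
    frozen: bool


def trainable_fraction(components):
    """Return (trainable, total, trainable / total) parameter counts."""
    trainable = sum(c.params for c in components if not c.frozen)
    total = sum(c.params for c in components)
    return trainable, total, trainable / total


# Rough, illustrative sizes for a BLIP-2-style setup (see assumptions in the lead-in).
blip2_like = [
    Component("image encoder (ViT-g/14)", 1_000_000_000, frozen=True),
    Component("Q-Former, including its 32 learned queries", 188_000_000, frozen=False),
    Component("language model (e.g. OPT-2.7B)", 2_700_000_000, frozen=True),
]

trainable, total, frac = trainable_fraction(blip2_like)
print(f"{trainable:,} of {total:,} parameters are trained ({frac:.1%})")
```

With these rough sizes, under 5% of the parameters receive gradient updates, which is where most of the training-cost savings come from.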

Performance and Results

Future Directions

The paper suggests several future research directions, including extending the framework to other modalities and improving the efficiency of the querying transformer. These advancements could further enhance the capabilities of multimodal models.

- Frozen image encoders reduce computational costs

- Lightweight querying transformer bridges vision and language

- State-of-the-art performance on multiple benchmarks