Key Points on Robust Video Matting

By John Doe 5 min


Research suggests Robust Video Matting can produce high-quality, studio-ready content by automating video matting.

It seems likely that this deep-learning tool separates foreground from background efficiently, which makes it well suited to professional video editing.

The evidence leans toward it being user-friendly, with a Colab demo for beginners and local installation for advanced users.

An unexpected detail is its real-time processing capability: 4K at 76 FPS and HD at 104 FPS on an Nvidia GTX 1080 Ti GPU.

Introduction to Video Matting and Its Importance

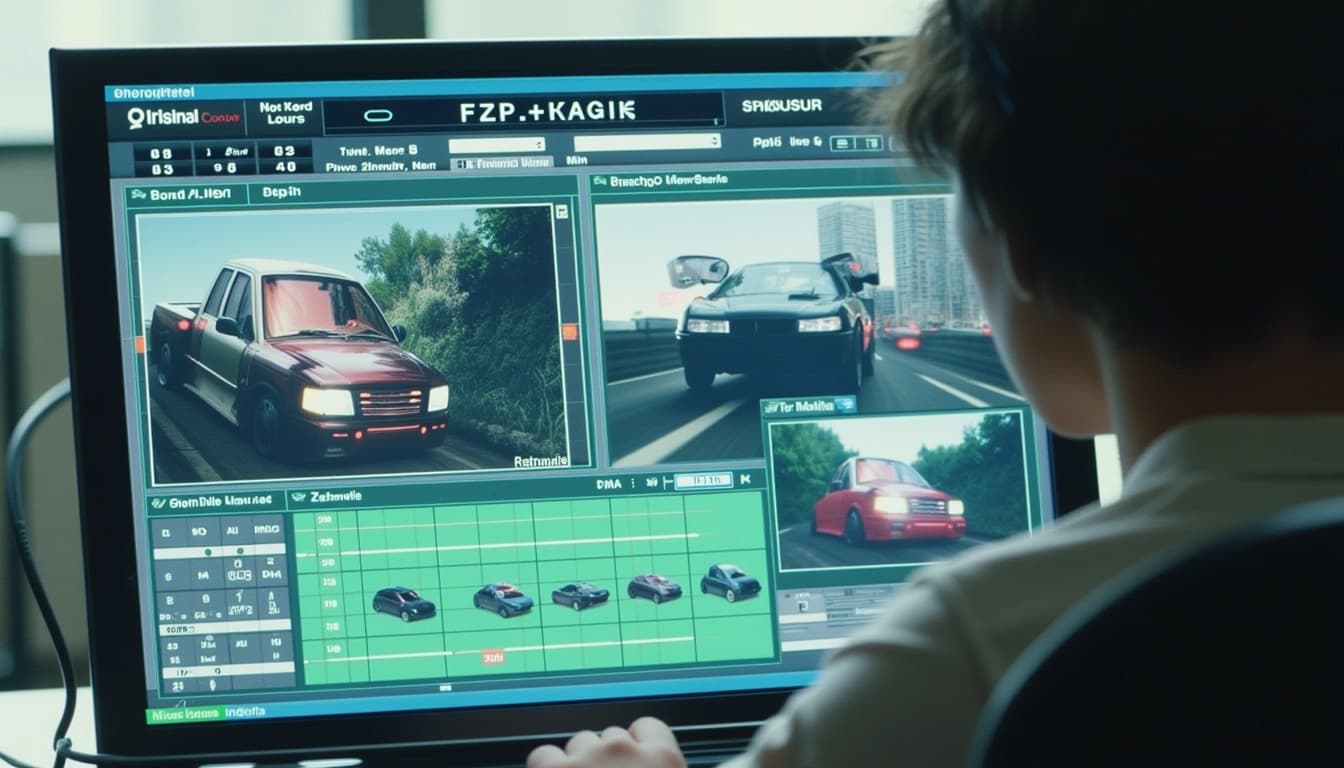

Video matting is the process of separating the foreground (e.g., a person) from the background in a video by predicting an alpha matte, a per-pixel opacity map that controls how the layers are mixed. This technique is crucial for studio-ready content, such as replacing backgrounds in professional broadcasts or compositing elements in high-end productions. Manual matting has traditionally been labor-intensive, but tools like Robust Video Matting automate it with deep learning.
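The alpha matte mixes the layers through the standard compositing equation, C = αF + (1 - α)B, where F is the foreground color, B the new background, and α the per-pixel opacity. The sketch below applies that equation to pixels stored as plain Python tuples; real pipelines would use tensors or arrays, and the function name `composite` is illustrative rather than part of any library.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def composite(fgr: Sequence[RGB], bgr: Sequence[RGB], pha: Sequence[float]) -> List[RGB]:
    """Blend foreground over background per pixel: C = alpha * F + (1 - alpha) * B."""
    if not (len(fgr) == len(bgr) == len(pha)):
        raise ValueError("foreground, background and alpha must have the same length")
    out: List[RGB] = []
    for f, b, a in zip(fgr, bgr, pha):
        if not 0.0 <= a <= 1.0:
            raise ValueError(f"alpha {a} is outside [0, 1]")
        # Each color channel is a weighted average of foreground and background.
        out.append(tuple(a * fc + (1.0 - a) * bc for fc, bc in zip(f, b)))
    return out


# A fully opaque, a half-transparent and a fully transparent pixel.
print(composite(
    fgr=[(1.0, 0.0, 0.0)] * 3,  # red subject
    bgr=[(0.0, 0.0, 1.0)] * 3,  # blue replacement background
    pha=[1.0, 0.5, 0.0],
))
# -> [(1.0, 0.0, 0.0), (0.5, 0.0, 0.5), (0.0, 0.0, 1.0)]
```

Soft alpha values (between 0 and 1) are what let hair and motion-blurred edges blend naturally instead of showing a hard cut-out.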

What Is Robust Video Matting?

Robust Video Matting, developed by PeterL1n and his team, is a deep learning-based tool designed specifically for human video matting. Unlike methods that process each frame independently, it uses a recurrent neural network to exploit temporal information, which improves coherence across frames. Key features include:

- Real-time processing: 4K at 76 FPS and HD at 104 FPS on an Nvidia GTX 1080 Ti GPU.

- No need for additional inputs like trimaps or pre-captured backgrounds, making it versatile.

- Availability in multiple frameworks (PyTorch, TensorFlow, TensorFlow.js, ONNX, CoreML) for broad accessibility.

The tool is trained on both matting and segmentation datasets, enhancing its robustness across various scenarios.
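The recurrent design changes how inference is called: each frame is processed together with state carried over from earlier frames. The repository README shows a loop of the form `fgr, pha, *rec = model(src, *rec, downsample_ratio)`, starting from `rec = [None] * 4`. The sketch below reproduces only that calling pattern in plain Python; `toy_model` is a hypothetical stand-in for the network that simply counts frames, so the state threading can be checked without a GPU.

```python
from typing import Any, Callable, List, Sequence, Tuple


def matte_sequence(
    model: Callable[..., Tuple[Any, ...]],
    frames: Sequence[Any],
    downsample_ratio: float = 0.25,
) -> List[Tuple[Any, Any]]:
    """Run a recurrent matting model frame by frame, cycling its hidden states."""
    rec: List[Any] = [None] * 4  # four recurrent states, empty before the first frame
    results = []
    for src in frames:
        # The model returns foreground, alpha, then its updated states,
        # which are fed back in on the next frame.
        fgr, pha, *rec = model(src, *rec, downsample_ratio)
        results.append((fgr, pha))
    return results


def toy_model(src, r1, r2, r3, r4, downsample_ratio):
    """Hypothetical stand-in: reports how many frames it has seen as its 'alpha'."""
    seen = (r1 or 0) + 1
    return src, seen, seen, r2, r3, r4


print(matte_sequence(toy_model, ["frame0", "frame1", "frame2"]))
# -> [('frame0', 1), ('frame1', 2), ('frame2', 3)]
```

With the real model, frames would be tensors and `rec` would hold feature maps; starting again from `[None] * 4` begins a new clip with empty memory.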

How to Use Robust Video Matting for Studio-Ready Content

You can use Robust Video Matting in two main ways, catering to different levels of experience: the Colab demo for quick testing, or a local installation for more control.

Advanced video matting tools like Robust Video Matting make studio-ready content easier to create. The tool separates foreground elements from the background with high precision, which makes it well suited to professional video production. Whether you are a content creator, filmmaker, or broadcaster, it can significantly streamline your workflow.

Understanding Robust Video Matting

Robust Video Matting is a state-of-the-art tool for efficient video matting. Its deep learning models distinguish foreground from background accurately, even in complex scenes. It comes in two model variants, mobilenetv3 and resnet50, which trade speed against accuracy.

Key Features of Robust Video Matting

One standout feature of Robust Video Matting is real-time processing, which makes it suitable for live broadcasts. It also produces high-quality alpha mattes, which are essential for seamless compositing. Its video converter can also process several frames per forward pass (the `seq_chunk` setting) for better GPU throughput.
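The repository's video converter exposes a `seq_chunk` setting that feeds several frames to the model in one call. This does not break temporal coherence, because the recurrent state still flows from one chunk to the next; the README describes the model as accepting either a single frame batch `[B, C, H, W]` or a time sequence `[B, T, C, H, W]`. The helper below only shows how a frame stream can be grouped into chunks; `iter_chunks` is an illustrative name, not the repository's converter code.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def iter_chunks(frames: Iterable[T], seq_chunk: int) -> Iterator[List[T]]:
    """Yield consecutive groups of up to `seq_chunk` frames, preserving order."""
    if seq_chunk < 1:
        raise ValueError("seq_chunk must be at least 1")
    it = iter(frames)
    while True:
        chunk = list(islice(it, seq_chunk))
        if not chunk:  # the stream is exhausted
            return
        yield chunk


# Ten frames in chunks of four: the last chunk is shorter.
print([len(c) for c in iter_chunks(range(10), 4)])  # -> [4, 4, 2]
```

Larger chunks keep the GPU busier but also use more memory, so the chunk size is a trade-off to tune per machine.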

Getting Started with Robust Video Matting

To get started, choose between the Colab demo for quick testing and a local installation for more control. The Colab demo suits beginners, providing a user-friendly way to upload and process videos without setting up a local environment. A local installation, on the other hand, exposes settings such as the model variant and downsample ratio for professionals.
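For a local installation, the repository README documents loading the model and a video converter through PyTorch Hub. The sketch below wraps that in a function; the hub entry points (`"mobilenetv3"`, `"resnet50"`, `"converter"`) and the `convert_video` keyword names follow the README at the time of writing and should be checked against the current version. `build_convert_kwargs` and `run_local` are hypothetical helper names, and PyTorch is imported only when `run_local` is called, so the argument-building part works without it.

```python
from typing import Any, Dict, Optional


def build_convert_kwargs(
    input_source: str,
    composition_path: str,
    alpha_path: Optional[str] = None,
    downsample_ratio: Optional[float] = None,  # None lets the converter choose
    seq_chunk: int = 12,
    mbps: float = 4.0,
) -> Dict[str, Any]:
    """Collect keyword arguments for the repository's convert_video helper."""
    kwargs: Dict[str, Any] = {
        "input_source": input_source,  # video file or image-sequence directory
        "output_type": "video",        # or "png_sequence"
        "output_composition": composition_path,
        "output_video_mbps": mbps,
        "downsample_ratio": downsample_ratio,
        "seq_chunk": seq_chunk,        # frames processed per forward pass
    }
    if alpha_path is not None:
        kwargs["output_alpha"] = alpha_path  # optional raw alpha matte
    return kwargs


def run_local(variant: str = "mobilenetv3", **convert_args: Any) -> None:
    """Download the model via PyTorch Hub and convert one video (needs network access)."""
    import torch  # imported lazily so the helper above works without PyTorch

    device = "cuda" if torch.cuda.is_available() else "cpu"
    model = torch.hub.load("PeterL1n/RobustVideoMatting", variant).eval().to(device)
    convert_video = torch.hub.load("PeterL1n/RobustVideoMatting", "converter")
    convert_video(model, **build_convert_kwargs(**convert_args))


# Example (not executed here):
# run_local("mobilenetv3", input_source="input.mp4", composition_path="com.mp4")
```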

Best Practices for Optimal Results

Getting the best results from Robust Video Matting requires attention to detail. Good lighting and a stable camera help the model separate foreground from background accurately. Experiment with the model variants and settings to find the right balance between speed and quality for your footage.

Conclusion & Next Steps

Robust Video Matting can substantially speed up your video production process. By following the tips and best practices in this article, you can create studio-ready content with far less manual effort. Explore the tool further and experiment with its settings to get the most out of it.

- Ensure good lighting for accurate matting

- Use a stable camera to reduce motion blur

- Choose the right model (mobilenetv3 or resnet50) based on your needs

- Adjust the downsample ratio for optimal performance
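The downsample ratio controls how much each frame is shrunk before the model's coarse first pass, which is then refined at full resolution. The README recommends a ratio that puts the downsampled frame roughly between 256 and 512 pixels, for example 0.25 for 1920x1080, giving 480x270. The helper below encodes that rule of thumb by capping the longer side at 512 pixels; the 512 target and the function names are assumptions to tune, not an official formula.

```python
from typing import Tuple


def suggest_downsample_ratio(height: int, width: int, target_long_side: int = 512) -> float:
    """Scale so the longer side is at most `target_long_side` pixels (never upsample)."""
    if height <= 0 or width <= 0:
        raise ValueError("frame dimensions must be positive")
    return min(target_long_side / max(height, width), 1.0)


def downsampled_size(height: int, width: int, ratio: float) -> Tuple[int, int]:
    """Resolution the coarse pass would see for a given ratio (rounded to whole pixels)."""
    return round(height * ratio), round(width * ratio)


for h, w in [(1080, 1920), (2160, 3840), (480, 640)]:
    r = suggest_downsample_ratio(h, w)
    print(f"{w}x{h}: ratio={r:.3f}, coarse pass at {downsampled_size(h, w, r)}")
```

For 1080p this gives about 0.27, close to the README's suggested 0.25; both keep the coarse pass inside the recommended range. Wide shots with small subjects may benefit from a higher ratio.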

Video matting has traditionally been a complex process, requiring specialized equipment like green screens and significant post-production effort. Deep learning has produced tools like Robust Video Matting that automate this process and make high-quality results accessible to a broader audience.

Technical Overview of Robust Video Matting

Robust Video Matting, developed by PeterL1n and his team, is a state-of-the-art solution for human video matting. It uses a recurrent neural network that carries temporal memory from frame to frame. Because it considers frame sequences rather than treating each frame independently, it improves temporal coherence, a critical factor for video quality. The architecture uses convolutional GRUs (ConvGRU) to maintain and update hidden states across frames.
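A ConvGRU is a gated recurrent unit whose matrix multiplications are replaced by convolutions, so the hidden state keeps its spatial layout. The sketch below follows the standard formulation: a reset gate r and update gate z computed from the input and previous state, a candidate state c computed from the input and the reset-gated state, and the new state h' = (1 - z)·h + z·c. To stay dependency-free, it shrinks everything to a single channel and a 1-D signal with hand-set kernels; RVM itself uses learned 2-D convolutions over multi-channel feature maps, and the class name and weights here are illustrative.

```python
import math
from typing import List, Optional, Sequence, Tuple

Kernel = Sequence[float]


def conv1d_same(signal: Sequence[float], kernel: Kernel) -> List[float]:
    """Zero-padded 1-D cross-correlation; output length equals input length (odd kernels)."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + j - half
            if 0 <= idx < n:  # positions outside the signal count as zero
                acc += w * signal[idx]
        out.append(acc)
    return out


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


class ConvGRUCell1D:
    """Single-channel ConvGRU; each gate has one kernel for x and one for the state."""

    def __init__(self, r: Tuple[Kernel, Kernel], z: Tuple[Kernel, Kernel],
                 c: Tuple[Kernel, Kernel], bias_z: float = 0.0) -> None:
        self.r, self.z, self.c, self.bias_z = r, z, c, bias_z

    def _gate(self, kernels: Tuple[Kernel, Kernel], x: Sequence[float],
              h: Sequence[float], bias: float = 0.0) -> List[float]:
        # Sum of convolutions over the two "input channels" (x and h), plus a bias.
        return [a + b + bias for a, b in zip(conv1d_same(x, kernels[0]), conv1d_same(h, kernels[1]))]

    def step(self, x: Sequence[float], h: Optional[Sequence[float]] = None) -> List[float]:
        if h is None:
            h = [0.0] * len(x)  # empty memory before the first frame
        r = [sigmoid(v) for v in self._gate(self.r, x, h)]
        z = [sigmoid(v) for v in self._gate(self.z, x, h, self.bias_z)]
        reset_h = [ri * hi for ri, hi in zip(r, h)]
        c = [math.tanh(v) for v in self._gate(self.c, x, reset_h)]
        # Blend old state and candidate: z near 0 keeps memory, z near 1 overwrites it.
        return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, c)]


smooth = (0.25, 0.5, 0.25)
cell = ConvGRUCell1D(r=(smooth, smooth), z=(smooth, smooth), c=(smooth, smooth))
state = None
for frame in ([0.0, 1.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]):
    state = cell.step(frame, state)
    print([round(v, 3) for v in state])
```

Note how the state does not drop to zero on the last, empty frame: the gated blend lets earlier frames keep influencing the output, which is what smooths the matte over time.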

Performance and Efficiency

This method achieves real-time processing, with benchmarks of 76 FPS at 4K and 104 FPS at HD on an Nvidia GTX 1080 Ti GPU. The model is also lighter than previous approaches, with 58% fewer parameters than MODNet, and is available for multiple frameworks, including PyTorch, TensorFlow, TensorFlow.js, ONNX, and CoreML.
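Frame rates translate directly into a per-frame time budget, which is the number that matters when planning a live pipeline. The arithmetic below uses the benchmark figures quoted above; the 30 FPS target is an assumed example, and because the benchmarks describe the model on the stated GPU, end-to-end throughput including video decoding and encoding is likely to be lower.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent per frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def headroom(model_fps: float, target_fps: float) -> float:
    """How many times faster than the target the model runs (>= 1 means real time)."""
    return model_fps / target_fps


for label, fps in [("4K", 76.0), ("HD", 104.0)]:
    print(f"{label}: {frame_time_ms(fps):.1f} ms/frame, "
          f"{headroom(fps, 30.0):.2f}x a 30 FPS target")
# -> 4K: 13.2 ms/frame, 2.53x a 30 FPS target
# -> HD: 9.6 ms/frame, 3.47x a 30 FPS target
```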

Training and Datasets

The tool is trained on a combination of matting datasets, such as VideoMatte240K, Distinctions-646, and Adobe Image Matting, alongside segmentation datasets like YouTubeVIS, COCO, and SPD. This dual training strategy improves robustness, handling diverse human appearances and backgrounds effectively without requiring auxiliary inputs like trimaps or pre-captured backgrounds.
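The idea of training one network on two objectives can be illustrated with a simple scheduler that alternates between matting and segmentation batches. This is a hypothetical round-robin for illustration only: the paper's actual sampling, loss weighting, and training stages are not reproduced here, and `training_schedule` is an invented name.

```python
from itertools import cycle
from typing import Iterator, Tuple

# Dataset groups as listed in this article.
MATTING_SETS = ("VideoMatte240K", "Distinctions-646", "Adobe Image Matting")
SEGMENTATION_SETS = ("YouTubeVIS", "COCO", "SPD")


def training_schedule(steps: int) -> Iterator[Tuple[str, str]]:
    """Yield (objective, dataset) pairs, switching objective on every step.

    Hypothetical round-robin: not the paper's exact schedule.
    """
    matting, segmentation = cycle(MATTING_SETS), cycle(SEGMENTATION_SETS)
    for step in range(steps):
        if step % 2 == 0:
            yield "matting", next(matting)            # supervise alpha and foreground
        else:
            yield "segmentation", next(segmentation)  # supervise a binary person mask


for objective, dataset in training_schedule(4):
    print(objective, dataset)
```

The segmentation data adds variety in people and scenes that dedicated matting datasets lack, which is the intuition behind the robustness gain described above.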

Applications and Accessibility

Robust Video Matting simplifies the process of creating high-quality video mattes, making it accessible to content creators, filmmakers, and developers. Its ability to handle real-time processing and diverse backgrounds opens up new possibilities for creative applications, from live streaming to post-production.

Conclusion & Next Steps

Robust Video Matting represents a significant advancement in video matting technology, combining efficiency, quality, and ease of use. As the tool continues to evolve, future developments may include enhanced support for mobile devices and integration with more platforms. For those interested in exploring this technology further, the GitHub repository provides comprehensive documentation and examples.

- Explore the GitHub repository for implementation details

- Experiment with different datasets to improve model performance

- Integrate the tool into your video processing pipeline

Robust Video Matting is an advanced AI tool for high-quality video matting, enabling precise foreground extraction from videos. It uses deep learning to separate subjects from their backgrounds accurately, which makes it valuable for video editing and production. Models are available for several frameworks, including PyTorch, TensorFlow, and ONNX, giving developers and content creators flexibility.

Key Features and Capabilities

Robust Video Matting excels at real-time performance, handling high-resolution video at interactive frame rates. Its ability to maintain temporal consistency across frames sets it apart from frame-by-frame matting methods. It also offers pre-trained models, mobilenetv3 and resnet50, for different speed and quality requirements.

Multi-Platform Support

Robust Video Matting is also compatible with multiple frameworks: beyond PyTorch, TensorFlow, and ONNX, the project provides TensorFlow.js and CoreML versions. This lets users work in their preferred development environment.

Usage and Accessibility

Robust Video Matting is designed to be accessible to both beginners and advanced users. The Colab demo offers a user-friendly way to test it quickly, while a local installation provides more control for those with suitable hardware. The documentation guides users through setup and usage with clear instructions.

Conclusion and Next Steps

Robust Video Matting is a powerful tool for anyone involved in video production or editing. Its capabilities, combined with its ease of use, make it a valuable addition to many workflows. To explore further, visit the GitHub repository, which offers extensive resources and community support.

- Explore the Colab demo for a quick start

- Download pre-trained models for local use

- Join the community for support and updates

Robust Video Matting (RVM) is a cutting-edge tool designed for high-quality video matting, enabling users to extract foreground elements with precision. It leverages deep learning to separate subjects from backgrounds, making it ideal for professional video editing and studio applications. The tool is optimized for both speed and accuracy, ensuring smooth performance even with high-resolution footage.

Installation and Setup

Setting up RVM involves installing Python and PyTorch, then cloning the repository from GitHub. Check that your system meets the hardware requirements, particularly a compatible GPU for good performance. The installation is straightforward but requires attention to detail, especially when installing matching versions of PyTorch and torchvision.

Windows-Specific Installation

For Windows users, additional steps may be necessary, such as configuring environment variables and ensuring CUDA support is enabled. Tutorials are available to guide users through the process, including video walkthroughs that demonstrate each step clearly. Proper installation is crucial to avoid runtime errors and ensure seamless operation.
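Before running RVM locally, it helps to confirm that PyTorch and torchvision are importable and whether CUDA is visible, since that catches many of the installation problems described above, on Windows and elsewhere. The script below is a minimal sketch using the standard library plus PyTorch's `torch.cuda.is_available()`; `check_environment` is a hypothetical helper, not part of the repository.

```python
import importlib.util
import platform
import sys
from typing import Any, Dict


def check_environment() -> Dict[str, Any]:
    """Report Python version, OS, whether PyTorch/torchvision are installed, and CUDA visibility."""
    report: Dict[str, Any] = {
        "python": platform.python_version(),
        "os": platform.system(),  # e.g. "Windows", "Linux", "Darwin"
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "torchvision_installed": importlib.util.find_spec("torchvision") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        import torch  # only imported when present

        report["torch_version"] = torch.__version__
        report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    report = check_environment()
    for key, value in report.items():
        print(f"{key}: {value}")
    if not report["cuda_available"]:
        print("CUDA not visible: inference would run on the CPU, typically far slower.",
              file=sys.stderr)
```

If PyTorch is installed but CUDA is not visible, a common cause is a CPU-only PyTorch build; reinstalling a CUDA-enabled build that matches your driver usually resolves it.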

Best Practices for Studio-Ready Results

To achieve professional-grade results, follow best practices such as good lighting and a stable camera. These factors significantly affect the model's ability to separate foreground from background accurately. Selecting the right model variant, mobilenetv3 for speed or resnet50 for higher accuracy, also affects output quality.
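One way to make the speed-versus-accuracy choice concrete is to measure both variants on your own hardware and pick the more accurate one only if it still meets your frame-rate target. The function below encodes that rule; the FPS values in the example are placeholders to replace with your own measurements, and the rule itself is a suggestion rather than official guidance.

```python
from typing import Mapping

# Ordered from most accurate to fastest, per the description above.
PREFERENCE = ("resnet50", "mobilenetv3")


def choose_variant(measured_fps: Mapping[str, float], target_fps: float) -> str:
    """Return the most accurate variant whose measured FPS meets the target.

    Falls back to the fastest variant if none meets it.
    """
    for variant in PREFERENCE:
        if measured_fps.get(variant, 0.0) >= target_fps:
            return variant
    return PREFERENCE[-1]


# Placeholder measurements: replace with numbers from your own GPU and footage.
print(choose_variant({"mobilenetv3": 48.0, "resnet50": 22.0}, target_fps=30.0))  # -> mobilenetv3
print(choose_variant({"mobilenetv3": 48.0, "resnet50": 22.0}, target_fps=20.0))  # -> resnet50
```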

Performance Metrics and Comparisons

RVM reaches 76 FPS at 4K and 104 FPS at HD on an Nvidia GTX 1080 Ti GPU, fast enough for real-time use. Because its recurrent architecture carries information across frames instead of processing each frame in isolation, it improves temporal coherence, reducing flicker and improving overall video quality.
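Flicker shows up as alpha values that jump between consecutive frames in regions that should be stable. A crude way to compare runs is the mean absolute change in alpha from one frame to the next, sketched below on flattened mattes. This is a simple illustrative proxy, not the temporal-coherence metric used in the paper (which reports dtSSD), and real motion also produces change, so compare settings on the same clip.

```python
from typing import Sequence


def mean_alpha_change(mattes: Sequence[Sequence[float]]) -> float:
    """Average absolute per-pixel alpha difference between consecutive frames."""
    if len(mattes) < 2:
        return 0.0
    total, count = 0.0, 0
    for prev, cur in zip(mattes, mattes[1:]):
        if len(prev) != len(cur):
            raise ValueError("all mattes must have the same number of pixels")
        total += sum(abs(c - p) for p, c in zip(prev, cur))
        count += len(cur)
    return total / count if count else 0.0


steady = [[0.0, 1.0, 1.0, 0.0]] * 3
flickery = [[0.0, 1.0, 1.0, 0.0], [0.0, 0.6, 1.0, 0.4], [0.0, 1.0, 1.0, 0.0]]
print(mean_alpha_change(steady), mean_alpha_change(flickery))
```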

Limitations and Considerations

While RVM excels in human video matting, its performance may vary with non-human subjects. Users should also be mindful of hardware limitations, as older GPUs might not support all features. Understanding these constraints helps in setting realistic expectations and planning workflows accordingly.

Conclusion & Next Steps

Robust Video Matting is a powerful tool for professionals seeking high-quality video matting solutions. By following installation guides and best practices, users can leverage its full potential. Future updates may expand its capabilities, making it even more versatile for various applications.

- Ensure proper lighting for optimal results

- Choose the right model variant based on needs

- Monitor hardware compatibility for smooth operation

Robust Video Matting is a cutting-edge tool for high-quality video matting, enabling users to extract foreground subjects from videos with precision. It uses deep learning to separate subjects from backgrounds, which makes it ideal for content creators and video editors. It supports several frameworks, including PyTorch, TensorFlow, and ONNX, for flexibility and compatibility.

Key Features of Robust Video Matting

Robust Video Matting offers real-time processing, allowing users to achieve studio-quality results without extensive manual effort. Pre-trained models simplify the matting process, making it accessible even for beginners. It also handles high-resolution input, producing mattes at the original resolution.

Real-Time Processing

One of the standout features of Robust Video Matting is real-time video processing. This is particularly useful for live streaming or quick edits where time is of the essence. It relies on GPU acceleration to reach these speeds.

Installation and Usage

Installing Robust Video Matting is straightforward, with detailed instructions in the GitHub repository. Users can choose between a local installation and the Colab demo for quick testing. Its Python API and documentation make it easy to integrate into existing workflows.

Performance and Compatibility

Robust Video Matting is designed to run on a range of hardware, from high-end GPUs to more modest setups. Its benchmark frame rates demonstrate its efficiency even on demanding video inputs, and compatibility with multiple frameworks lets users work in their preferred environment.

GPU Requirements

For the best performance, Robust Video Matting needs a GPU with sufficient memory. The high frame rates measured on a GTX 1080 Ti show that it handles demanding workloads on such hardware. Users should make sure their hardware meets the recommended specifications.
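GPU memory needs grow with resolution and with how many frames are processed at once. The arithmetic below counts only the raw input tensor (3 channels at 4 bytes per value for float32), so it is a lower bound: activations, recurrent states, and outputs add considerably more, and half precision would halve these particular figures. The 12-frame chunk is just an example value, and `input_tensor_bytes` is an illustrative name.

```python
def input_tensor_bytes(width: int, height: int, frames: int = 1,
                       channels: int = 3, bytes_per_value: int = 4) -> int:
    """Bytes for a raw [frames, channels, height, width] input tensor (float32 by default)."""
    return width * height * channels * bytes_per_value * frames


MIB = 1024 ** 2
for label, (w, h) in {"HD": (1920, 1080), "4K": (3840, 2160)}.items():
    one = input_tensor_bytes(w, h)
    chunk = input_tensor_bytes(w, h, frames=12)
    print(f"{label}: {one / MIB:.1f} MiB per frame, {chunk / MIB:.1f} MiB per 12-frame chunk")
```

A 4K chunk of that size already needs over a gibibyte for the input alone, which is why smaller chunks are the first thing to try when a GPU runs out of memory.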

Conclusion

Robust Video Matting represents a significant advance in video matting technology, offering both quality and efficiency. Its real-time processing and availability across frameworks make it a versatile tool for content creators. By following best practices, users can get the most from the tool and produce professional-grade videos.

- Real-time video matting

- High-resolution support

- Multi-platform compatibility