Prompt Interpolation in Motion: Using nateraw/stable-diffusion-videos

By John Doe 5 min

Key Points

Prompt interpolation in motion, as implemented in nateraw/stable-diffusion-videos, creates videos by smoothly transitioning between two text prompts in Stable Diffusion, a popular AI image generation model.

The process interpolates the text embeddings and the noise generated from the seeds to produce a sequence of images, which are then combined into a video. How smooth the result looks varies from case to case.

The method is creative but does not always produce perfectly smooth videos. It offers a distinctive way to explore transitions in AI-generated content.

What is Prompt Interpolation in Motion?

Overview

Prompt interpolation in motion refers to the technique of generating a video by gradually morphing between two text prompts using Stable Diffusion. This process creates a sequence of images that transition smoothly from one concept (e.g., "a cat") to another (e.g., "a dog"), which are then stitched together to form a video.

How It Works

Stable Diffusion converts text prompts into numerical representations called embeddings. The nateraw/stable-diffusion-videos repository, available on [GitHub](https://github.com/nateraw/stable-diffusion-videos), builds on this by:

- Interpolating the text embeddings linearly between two prompts to create intermediate states.

- Also interpolating the initial noise: each endpoint's seed generates a noise tensor, and the frames blend between those tensors, which helps maintain some consistency across frames.

- Generating an image for each interpolated step using these embeddings and seeds, then combining them into a video.

This method allows for creative exploration, such as morphing between objects or styles, but may not always result in perfectly smooth transitions due to the independent generation of each frame.
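One way to put a number on "not perfectly smooth" is to measure how much each frame differs from the one before it. The sketch below is only an illustration under simple assumptions: frames are flat lists of pixel intensities, and the helper name `frame_to_frame_change` is hypothetical rather than part of the repository.

```python
from typing import List, Sequence


def frame_to_frame_change(frames: Sequence[Sequence[float]]) -> List[float]:
    """Mean absolute pixel difference between each pair of consecutive frames."""
    changes = []
    for prev, curr in zip(frames, frames[1:]):
        if len(prev) != len(curr):
            raise ValueError("all frames must have the same number of pixels")
        total = sum(abs(p - c) for p, c in zip(prev, curr))
        changes.append(total / len(prev))
    return changes


# Toy 4-pixel "frames": a steady fade followed by one abrupt jump.
toy_frames = [
    [0.0, 0.0, 0.0, 0.0],
    [0.1, 0.1, 0.1, 0.1],
    [0.2, 0.2, 0.2, 0.2],
    [0.9, 0.9, 0.9, 0.9],
]
changes = frame_to_frame_change(toy_frames)
```

A spike in the returned list, like the last entry here, marks a transition that will likely read as a visible jump in the video.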

Unexpected Detail

An interesting aspect is that seeds themselves cannot be interpolated in any meaningful way: they are discrete integers, and neighboring seed values produce unrelated noise. The interpolation therefore has to act on the noise generated from the two seeds, and even that does not guarantee visually smooth transitions.
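The point about seeds being discrete can be checked with a small experiment. The sketch below uses Python's standard `random` module as a stand-in for the model's noise generator (an assumption made to keep the example lightweight) and compares the noise drawn from two adjacent seeds.

```python
import random
from typing import List


def noise_from_seed(seed: int, size: int) -> List[float]:
    """Draw `size` standard-normal samples from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def correlation(x: List[float], y: List[float]) -> float:
    """Pearson correlation between two equal-length sample lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


noise_42 = noise_from_seed(42, 4096)
noise_43 = noise_from_seed(43, 4096)

# Adjacent seeds: the two noise samples are expected to be essentially unrelated.
neighbor_corr = correlation(noise_42, noise_43)
# Same seed: the noise is reproduced exactly, so the correlation is 1.
self_corr = correlation(noise_42, noise_from_seed(42, 4096))
```

Because seed 43 is no "closer" to seed 42 than any other seed, stepping through integer seeds would give a sequence of unrelated starting points rather than a gradual drift.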

Stable Diffusion, released in 2022 by Stability AI, is a deep learning text-to-image model that generates high-quality images from text descriptions using diffusion techniques. It operates by refining random noise into an image guided by a text prompt, leveraging components like a text encoder and a diffusion model. This model is particularly notable for its ability to run on consumer hardware, making it accessible for creative applications.

Introduction to Stable Diffusion and Prompt Interpolation

Prompt interpolation involves creating a sequence of text embeddings that transition smoothly from one prompt to another, enabling the generation of a video that morphs between concepts. The nateraw/stable-diffusion-videos repository builds on this idea to produce videos by exploring the latent space and morphing between text prompts. This approach was inspired by a script shared by AI researcher Andrej Karpathy.

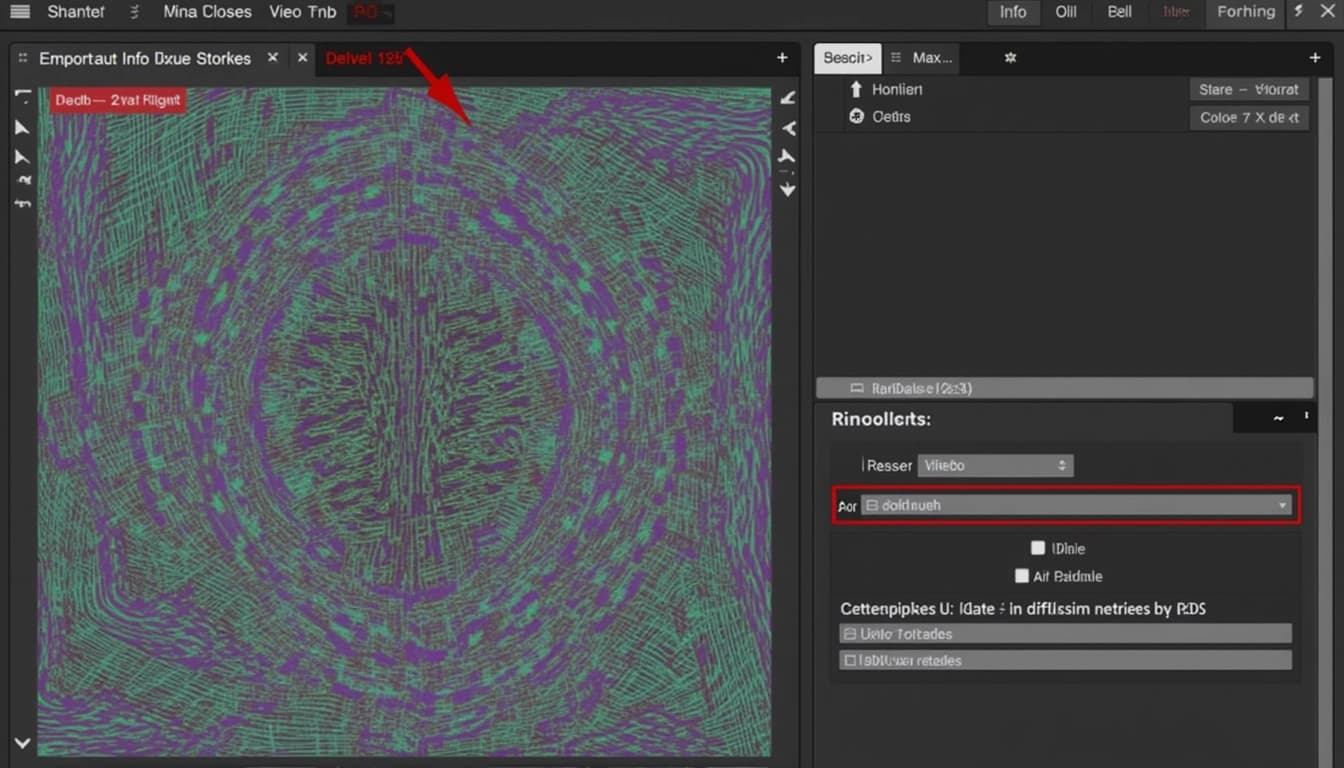

Detailed Mechanism of nateraw/stable-diffusion-videos

The repository provides a StableDiffusionWalkPipeline with a walk method that handles video creation. The process combines text-embedding interpolation with interpolation of the seed-derived noise. Text prompts are first encoded into embeddings using Stable Diffusion's text encoder. For two prompts, say 'a cat' and 'a dog', the embeddings E_A and E_B are interpolated linearly: for N interpolation steps, the intermediate embeddings are E_i = E_A + (i/N) * (E_B - E_A) for i from 0 to N, so E_0 = E_A and E_N = E_B. This yields N + 1 embeddings that blend from the first prompt to the second.
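The linear formula above can be written out directly. This is a minimal sketch on plain Python lists; real embeddings are large tensors, and the function name `lerp_embeddings` and the toy three-dimensional vectors are illustrative assumptions, not the repository's code.

```python
from typing import List


def lerp_embeddings(e_a: List[float], e_b: List[float], n_steps: int) -> List[List[float]]:
    """Return the N + 1 blends E_i = E_A + (i / N) * (E_B - E_A) for i = 0..N."""
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    if len(e_a) != len(e_b):
        raise ValueError("embeddings must have the same length")
    blends = []
    for i in range(n_steps + 1):
        t = i / n_steps
        blends.append([a + t * (b - a) for a, b in zip(e_a, e_b)])
    return blends


# Toy 3-dimensional stand-ins for the "a cat" and "a dog" embeddings.
e_cat = [1.0, 0.0, 2.0]
e_dog = [0.0, 4.0, 2.0]
sequence = lerp_embeddings(e_cat, e_dog, n_steps=4)
# sequence[0] equals e_cat, sequence[2] is the halfway blend, sequence[4] equals e_dog.
```

Each entry in `sequence` would be handed to the image generator in place of a single prompt's embedding.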

Challenges and Considerations

While prompt interpolation can produce visually compelling results, it is not without challenges. The interpolation may sometimes lead to artifacts or inconsistencies in the generated images, especially when the prompts describe vastly different concepts. Additionally, the smoothness of the transition depends heavily on the quality of the embeddings and the interpolation method used.

Conclusion & Next Steps

Stable Diffusion and prompt interpolation offer exciting possibilities for creative applications, from art generation to video production. Future improvements could focus on enhancing the smoothness of transitions and reducing artifacts. Experimenting with different interpolation methods and prompt combinations can yield even more impressive results.

- Explore different text prompts for interpolation

- Experiment with non-linear interpolation methods

- Optimize the pipeline for smoother video output
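As a starting point for the non-linear interpolation idea, one option is to keep the blend itself linear but space the weights unevenly with an easing curve. The sketch below uses the classic cubic smoothstep curve; applying it to prompt interpolation is an assumption of this example, not something the repository is known to do.

```python
from typing import List


def smoothstep(t: float) -> float:
    """Cubic ease-in/ease-out curve mapping [0, 1] onto [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def eased_weights(n_steps: int) -> List[float]:
    """Weights for n_steps + 1 frames, eased instead of evenly spaced."""
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    return [smoothstep(i / n_steps) for i in range(n_steps + 1)]


ease = eased_weights(4)
# ease == [0.0, 0.15625, 0.5, 0.84375, 1.0]
```

Compared with the even spacing 0, 0.25, 0.5, 0.75, 1, the eased weights linger near each prompt and move faster through the middle of the transition.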

The nateraw/stable-diffusion-videos repository on GitHub enables users to create videos by interpolating between text prompts using Stable Diffusion. This approach leverages the model's ability to generate images from text, allowing for smooth transitions between different concepts or scenes. The repository provides a straightforward way to produce creative content without requiring deep technical expertise.

How It Works: Interpolation and Seed Handling

The repository interpolates between two text prompts by generating intermediate embeddings, which are then fed into Stable Diffusion to produce a sequence of images. These embeddings are created by blending the text encodings of the prompts, ensuring a gradual transition. Additionally, the tool blends the noise generated from two seeds to initialize the diffusion process for each frame, though this does not by itself guarantee smooth transitions in the output.
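For the noise, a straight linear blend has a known drawback: halfway between two independent noise samples the result is noticeably "quieter" than either endpoint. Spherical linear interpolation (slerp) is a common remedy. The sketch below is a plain-Python stand-in written for illustration, with the `dot_threshold` default chosen as an assumption; it is not the repository's helper.

```python
import math
from typing import List


def lerp(t: float, a: List[float], b: List[float]) -> List[float]:
    """Straight-line blend between vectors a and b."""
    return [x + t * (y - x) for x, y in zip(a, b)]


def norm(v: List[float]) -> float:
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in v))


def slerp(t: float, a: List[float], b: List[float], dot_threshold: float = 0.9995) -> List[float]:
    """Spherical blend: move along the arc between a and b instead of the chord."""
    cos_theta = sum(x * y for x, y in zip(a, b)) / (norm(a) * norm(b))
    if abs(cos_theta) > dot_threshold:
        # Nearly parallel vectors: the arc formula is unstable, so fall back to lerp.
        return lerp(t, a, b)
    theta = math.acos(cos_theta)
    w_a = math.sin((1.0 - t) * theta) / math.sin(theta)
    w_b = math.sin(t * theta) / math.sin(theta)
    return [w_a * x + w_b * y for x, y in zip(a, b)]


# Two orthogonal unit vectors stand in for two independent noise samples.
noise_a = [1.0, 0.0]
noise_b = [0.0, 1.0]
mid_lerp = lerp(0.5, noise_a, noise_b)    # length shrinks to about 0.707
mid_slerp = slerp(0.5, noise_a, noise_b)  # length stays at about 1.0
```

Keeping the magnitude of the noise roughly constant matters because the diffusion model expects its starting noise to have a particular scale.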

Technical Details of Embedding Interpolation

The interpolation of text embeddings occurs in a high-dimensional embedding space, where each dimension represents learned features. Nearby points in this space tend to yield related images, which is what makes gradual blends between prompts possible. However, when the two endpoint seeds differ, the initial noise also changes from frame to frame, and this may introduce discontinuities in the generated video.

Challenges and Limitations

One major challenge is the potential for jarring transitions due to the independent generation of each frame. While interpolating embeddings helps, noise that varies from frame to frame can disrupt smoothness. Keeping the noise fixed and varying only the prompt, for example by giving both endpoints the same seed, might yield steadier results.

Practical Applications and Customization

The repository allows users to customize video generation by adjusting parameters like height, width, guidance scale, and the number of inference steps. This flexibility makes it suitable for various creative projects, from artistic animations to educational content. The tool's ease of use makes it accessible to a broad audience.
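A call using these parameters might be set up as follows. This is a hedged sketch: the keyword names and the model identifier mirror the project's README as recalled at the time of writing and should be checked against the installed version. The helper names `build_walk_kwargs` and `run_walk`, the default values, and the validation rules are assumptions of this example.

```python
from typing import Any, Dict, List


def build_walk_kwargs(
    prompts: List[str],
    seeds: List[int],
    num_interpolation_steps: int = 3,
    height: int = 512,
    width: int = 512,
    guidance_scale: float = 7.5,
    num_inference_steps: int = 50,
) -> Dict[str, Any]:
    """Collect and sanity-check settings before handing them to the pipeline."""
    if len(prompts) != len(seeds):
        raise ValueError("expected one seed per prompt")
    if height % 8 or width % 8:
        raise ValueError("height and width should be multiples of 8")
    return {
        "prompts": prompts,
        "seeds": seeds,
        "num_interpolation_steps": num_interpolation_steps,
        "height": height,
        "width": width,
        "guidance_scale": guidance_scale,
        "num_inference_steps": num_inference_steps,
    }


def run_walk(kwargs: Dict[str, Any], model_id: str = "CompVis/stable-diffusion-v1-4"):
    """Run the walk. Needs a CUDA GPU plus torch and stable_diffusion_videos."""
    # Imported lazily so the settings helper above works without these packages.
    import torch
    from stable_diffusion_videos import StableDiffusionWalkPipeline

    pipeline = StableDiffusionWalkPipeline.from_pretrained(
        model_id, torch_dtype=torch.float16
    ).to("cuda")
    return pipeline.walk(**kwargs)


settings = build_walk_kwargs(["a cat", "a dog"], seeds=[42, 1337])
# video_path = run_walk(settings)  # uncomment on a machine with a suitable GPU
```

Starting with a small `num_interpolation_steps` keeps test runs short; the value can be raised once the prompts and seeds look promising.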

Conclusion and Future Improvements

The nateraw/stable-diffusion-videos repository offers a promising way to create videos using Stable Diffusion, though it has room for improvement. Future versions could explore fixed-seed approaches or more advanced interpolation techniques to enhance video smoothness. Despite its limitations, the tool is a valuable resource for creative experimentation.

- Interpolates text prompts for smooth transitions

- Uses seed interpolation for variation

- Customizable parameters for flexibility

- Potential for improved smoothness with fixed seeds

The nateraw/stable-diffusion-videos repository offers a distinctive approach to video generation using Stable Diffusion, focusing on interpolating between text prompts to create smooth transitions. This method leverages Stable Diffusion's latent space to morph between different concepts, such as turning a cat into a dog or blending artistic styles. The process involves generating images at intermediate steps between two prompts and stitching them together into a video, providing a creative way to visualize AI-generated transformations.

Technical Implementation

The core functionality of nateraw/stable-diffusion-videos is built around the interpolation of text embeddings in Stable Diffusion's latent space. By calculating intermediate embeddings between two prompts, the tool generates a sequence of images that transition smoothly from one concept to another. These images are then compiled into a video, with options to adjust the frame rate and interpolation steps for smoother or more abrupt transitions. The repository provides both a Python API and a command-line interface, making it accessible for users with varying levels of technical expertise.
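The relationship between interpolation steps, frame rate, and clip length is simple arithmetic, sketched below. The helper names are hypothetical, and the example assumes one generated image per video frame.

```python
def video_duration_seconds(num_frames: int, fps: int) -> float:
    """Length of the clip when every generated image becomes one video frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


def frames_for_duration(seconds: float, fps: int) -> int:
    """Number of images needed to fill `seconds` of video at `fps`."""
    if fps <= 0 or seconds < 0:
        raise ValueError("fps must be positive and seconds non-negative")
    return round(seconds * fps)


short_clip = video_duration_seconds(num_frames=60, fps=30)  # 2.0 seconds
needed = frames_for_duration(seconds=4, fps=15)             # 60 frames
```

More frames over the same transition make each step smaller, which generally looks smoother, at the price of generating more images.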

Key Features

One of the standout features of this tool is its ability to interpolate not just between simple objects but also between complex scenes or styles. For example, users can morph a 'sunset over a beach' into a 'night sky with stars,' exploring the creative possibilities of AI-generated art. The tool also supports audio-driven interpolation, where the pace of the transition can be synchronized with music or other audio inputs, adding another layer of creativity to the videos.
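The idea behind audio-driven pacing can be sketched without any audio library: turn a per-frame loudness envelope into cumulative interpolation weights, so that loud moments push the transition forward and quiet ones hold it still. The envelope below is hand-written, and the function `audio_driven_weights` is a hypothetical illustration, not the repository's implementation.

```python
from typing import List


def audio_driven_weights(energy: List[float]) -> List[float]:
    """Turn a per-frame loudness envelope into interpolation weights in [0, 1].

    Loud frames advance the blend quickly; quiet frames barely move it.
    """
    if not energy or any(e < 0 for e in energy):
        raise ValueError("energy must be a non-empty list of non-negative values")
    total = sum(energy)
    if total == 0:
        # Complete silence: fall back to evenly spaced weights.
        n = len(energy)
        return [(i + 1) / n for i in range(n)]
    weights, running = [], 0.0
    for e in energy:
        running += e
        weights.append(running / total)
    return weights


# Two quiet frames, a build-up, a loud hit, then silence.
envelope = [0.0, 0.0, 1.0, 3.0, 0.0, 0.0]
audio_weights = audio_driven_weights(envelope)
# audio_weights == [0.0, 0.0, 0.25, 1.0, 1.0, 1.0]
```

Here most of the morph happens on the single loud frame, which is the visual effect one would want on a drum hit.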

Use Cases and Examples

The repository includes several examples demonstrating its capabilities, such as transforming a cat into a dog or blending different artistic styles. These examples are available in the provided Colab notebook, allowing users to experiment with the tool without needing to set up a local environment. Potential use cases range from creating artistic animations for music videos to generating educational content that visually explains complex concepts through gradual transformations.

Limitations and Future Directions

While nateraw/stable-diffusion-videos offers a creative approach to video generation, it has some limitations. The transitions may not always be perfectly smooth, especially when interpolating between vastly different concepts. Additionally, the computational cost can be high, particularly for longer videos or higher resolutions. Future enhancements could include more advanced interpolation techniques, better handling of complex prompts, and optimizations to reduce computational requirements.
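A rough way to reason about the computational cost is to count denoising passes: every frame runs the full set of inference steps. The sketch below is a back-of-the-envelope estimate under that assumption; the pixel-count ratio should be read as a lower bound, since parts of the model scale worse than linearly with resolution. Both helper names are hypothetical.

```python
def denoising_calls(num_frames: int, num_inference_steps: int) -> int:
    """Total denoising passes if each frame runs every inference step."""
    if num_frames < 0 or num_inference_steps < 0:
        raise ValueError("counts must be non-negative")
    return num_frames * num_inference_steps


def relative_pixel_count(height: int, width: int, base: int = 512) -> float:
    """Pixel count relative to a base x base image (a lower bound on extra cost)."""
    return (height * width) / (base * base)


calls = denoising_calls(num_frames=120, num_inference_steps=50)  # 6000 passes
scale = relative_pixel_count(768, 768)                           # 2.25x the pixels
```

A four-second clip at 30 frames per second therefore costs as much as generating 120 separate still images at the same settings.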

Conclusion & Next Steps

The nateraw/stable-diffusion-videos repository is a fascinating exploration of Stable Diffusion's potential beyond static image generation. By enabling video creation through text prompt interpolation, it opens up new possibilities for creative expression and AI-driven art. Users interested in experimenting with this tool can start with the provided Colab notebook or explore the Python API for more customized workflows. As the field of AI-generated content continues to evolve, tools like this will likely play a significant role in shaping the future of digital art and media.

- Experiment with different text prompts to explore creative possibilities.

- Adjust interpolation steps and frame rates for smoother transitions.

- Consider using audio-driven interpolation for synchronized video effects.

Stable Diffusion Videos is a tool that enables the creation of videos by interpolating between prompts in the latent space of Stable Diffusion models. This approach allows for smooth transitions between different text prompts, generating unique and creative video content. The tool leverages the power of Stable Diffusion, a popular text-to-image model, to produce dynamic visual narratives.

How Stable Diffusion Videos Work

The process involves generating a sequence of images by interpolating between two or more text prompts in the latent space of the Stable Diffusion model. Each frame is generated based on a weighted combination of the prompts, creating a smooth transition from one image to another. This technique can produce videos with varying styles, themes, and visual effects, depending on the input prompts and parameters.
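With more than two prompts, the walk becomes a chain of segments, each blending one prompt into the next. The sketch below lists which pair of prompts and which weight apply to every frame; the function name and the choice of evenly spaced weights per segment are assumptions of this example.

```python
from typing import List, Tuple


def walk_schedule(num_prompts: int, steps_per_segment: int) -> List[Tuple[int, int, float]]:
    """List (index_a, index_b, weight) for every frame of a multi-prompt walk.

    A frame with weight w blends prompt index_a at (1 - w) with prompt index_b at w.
    """
    if num_prompts < 2:
        raise ValueError("need at least two prompts")
    if steps_per_segment < 2:
        raise ValueError("need at least two frames per segment")
    schedule = []
    for a in range(num_prompts - 1):
        for i in range(steps_per_segment):
            w = i / (steps_per_segment - 1)
            schedule.append((a, a + 1, w))
    return schedule


# Three prompts, three frames per segment: six frames in total.
schedule = walk_schedule(num_prompts=3, steps_per_segment=3)
# The last frame of one segment (weight 1.0) and the first frame of the next
# (weight 0.0) both show the shared prompt on its own.
```

Whether to keep or drop the repeated boundary frame is a design choice: keeping it gives each prompt a brief hold, dropping it keeps the motion continuous.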

Latent Space Interpolation

Latent space interpolation is the core mechanism behind Stable Diffusion Videos. By navigating the latent space, the tool can blend different concepts and styles. This helps keep the transitions between frames coherent and visually appealing, although results still depend on the prompts and settings chosen, and it makes the tool suitable for artistic and professional applications.

Applications and Use Cases

Stable Diffusion Videos can be used for a variety of purposes, including music videos, art projects, marketing content, and educational materials. The ability to generate custom videos from text prompts opens up endless possibilities for creativity and storytelling. Users can experiment with different prompts and settings to achieve their desired visual outcomes.

Limitations and Future Improvements

While Stable Diffusion Videos offers impressive capabilities, it has some limitations. The quality of the videos depends on the prompts and the interpolation settings, which may require fine-tuning. Future improvements could include better smoothness, integration with advanced video diffusion models, and more intuitive controls for users.

- Generate smooth transitions between prompts

- Customize video styles and themes

- Experiment with different interpolation settings